
Showing results for tags 'ai chips'.

-

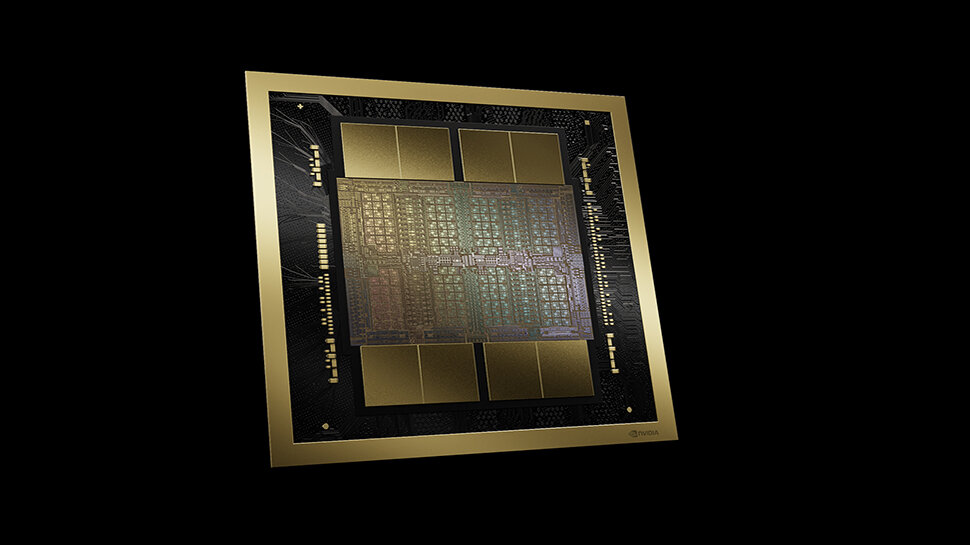

In a recent interview with CNBC's Jim Cramer, Nvidia CEO Jensen Huang shared details about the company’s upcoming Blackwell chip, which cost $10 billion in research and development to create.

The new GPU is built on a custom 4NP TSMC process and packs a total of 208 billion transistors (104 billion per die), with 192GB of HBM3e memory and 8TB/s of memory bandwidth. It involved the creation of new technology because what the company was trying to achieve “went beyond the limits of physics,” Huang said.

During the chat, Huang also revealed that the fist-sized Blackwell chip will sell for "between $30,000 and $40,000". That’s similar in price to the H100, which analysts say cost between $25,000 and $40,000 per chip when demand was at its peak.

A big markup

According to estimates by investment services firm Raymond James (via @firstadopter), each B200 will cost Nvidia more than $6,000 to make, compared with the estimated $3,320 production cost of the H100.

The actual final selling price of the GPU will vary depending on whether it's bought directly from Nvidia or through a third-party seller, but customers aren’t likely to be purchasing just the chips.

Nvidia has already unveiled three variations of its Blackwell AI accelerator with different memory configurations: the B100, the B200, and the GB200, which brings together two Nvidia B200 Tensor Core GPUs and a Grace CPU.

Nvidia’s strategy, however, is geared towards selling million-dollar AI supercomputers like the multi-node, liquid-cooled NVIDIA GB200 NVL72 rack-scale system, DGX B200 servers with eight Blackwell GPUs, or DGX B200 SuperPODs.

More from TechRadar Pro: Nvidia says its new Blackwell is set to power the next generation of AI; Nvidia CEO Jensen Huang sees Blackwell as the power behind the new AI age; Nvidia GTC 2024: all the updates as it happened

-

Samsung is reportedly planning to launch its own AI accelerator chip, the 'Mach-1', in a bid to challenge Nvidia's dominance in the AI semiconductor market.

The new chip, which will likely target edge applications with low power consumption requirements, will go into production by the end of this year and make its debut in early 2025, according to the Seoul Economic Daily.

The announcement was made during the company's 55th regular shareholders' meeting. Kye Hyun Kyung, CEO of Samsung Semiconductor, said the chip design had passed technological validation on FPGAs and that finalization of the SoC was in progress.

Entirely new type of AGI semiconductor

The Mach-1 accelerator is designed to tackle AI inference tasks and will reportedly overcome the bottlenecks that arise in existing AI accelerators when transferring data between the GPU and memory. Those bottlenecks often result in slower data transmission speeds and reduced power efficiency.

The Mach-1 is reportedly a 'lightweight' AI chip, utilizing low-power (LP) memory instead of the costly HBM typically used in AI semiconductors.

The move is widely seen as Samsung's attempt to regain its position as the world's largest semiconductor company, fighting back against Nvidia, which completely dominates the AI chip market and has seen its stock soar in recent months, making it the third most valuable company in the world behind Microsoft and Apple.

While the South Korean tech behemoth currently has no plans to challenge Nvidia's H100, B100, and B200 AI powerhouses, Seoul Economic Daily reports that Samsung has established an AGI computing lab in Silicon Valley to expedite the development of AI semiconductors.

Kyung stated that the specialized lab is “working to create an entirely new type of semiconductor designed to meet the processing requirements of future AGI systems.”

More from TechRadar Pro: Samsung to showcase the world’s fastest GDDR7 memory; Could this be the Samsung 1000 Pro SSD in disguise?; IT modernization is being driven by AI, security, and sustainability

-

Meta has unveiled details about its AI training infrastructure, revealing that it currently relies on almost 50,000 Nvidia H100 GPUs to train its open source Llama 3 LLM.

The company says it will have over 350,000 Nvidia H100 GPUs in service by the end of 2024, and computing power equivalent to nearly 600,000 H100s when combined with hardware from other sources.

The figures were revealed as Meta shared details on its two 24,576-GPU data center scale clusters.

Meta's own AI chips

The company explained, “These clusters support our current and next generation AI models, including Llama 3, the successor to Llama 2, our publicly released LLM, as well as AI research and development across GenAI and other areas.”

The clusters are built on Grand Teton (named after the National Park in Wyoming), an in-house-designed, open GPU hardware platform. Grand Teton integrates power, control, compute, and fabric interfaces into a single chassis for better overall performance and scalability.

The clusters also feature high-performance network fabrics, enabling them to support larger and more complex models than before. Meta says one cluster uses a remote direct memory access network fabric solution based on the Arista 7800, while the other features an NVIDIA Quantum2 InfiniBand fabric. Both solutions interconnect 400 Gbps endpoints.

"The efficiency of the high-performance network fabrics within these clusters, some of the key storage decisions, combined with the 24,576 NVIDIA Tensor Core H100 GPUs in each, allow both cluster versions to support models larger and more complex than that could be supported in the RSC and pave the way for advancements in GenAI product development and AI research," Meta said.

Storage is another critical aspect of AI training, and Meta has developed a Linux Filesystem in Userspace (FUSE) interface backed by a version of its 'Tectonic' distributed storage solution optimized for Flash media. This solution reportedly enables thousands of GPUs to save and load checkpoints in a synchronized fashion, in addition to "providing a flexible and high-throughput exabyte scale storage required for data loading".

While the company's current AI infrastructure relies heavily on Nvidia GPUs, it's unclear how long this will continue. As Meta continues to evolve its AI capabilities, it will inevitably focus on developing and producing more of its own hardware. Meta has already announced plans to use its own AI chips, called Artemis, in servers this year, and the company previously revealed it was getting ready to manufacture custom RISC-V silicon.

More from TechRadar Pro: Meta has done something that will get Nvidia and AMD very, very worried; Meta set to use own AI chips in its servers in 2024; Intel has a new rival to Nvidia's uber-popular H100 AI GPU
