
Showing results for tags 'stable diffusion'.

-

In our previous post, we discussed how to generate images using Stable Diffusion on AWS. In this post, we will guide you through running LLMs for text generation in your own environment on a GPU-based instance in simple steps, empowering you to create your own solutions.

Text generation, a trending focus in generative AI, facilitates a broad spectrum of language tasks beyond simple question answering. These tasks include content extraction, summary generation, sentiment analysis, text enhancement (including spelling and grammar correction), code generation, and the creation of intelligent applications like chatbots and assistants.

In this tutorial, we will demonstrate how to deploy two prominent large language models (LLMs) on a GPU-based EC2 instance on AWS (G4dn) using Ollama, an open source tool for downloading, managing, and serving LLMs. Before getting started, ensure you have completed our technical guide for installing NVIDIA drivers with CUDA on a G4dn instance.

We will use Llama2 and Mistral, both strong contenders in the LLM space with open source licenses suitable for this demo. While we won’t explore the technical details of these models, it is worth noting that Mistral has shown impressive results despite its relatively small size (7 billion parameters fitting into an 8GB VRAM GPU). Llama2, for its part, provides a range of models for various tasks, all available under open source licenses, making it well-suited for this tutorial.

To experiment with question-answer models similar to ChatGPT, we will use the fine-tuned versions optimized for chat or instruction (Mistral-instruct and Llama2-chat), as the base models are primarily designed for text completion. Let’s get started!
Step 1: Installing Ollama

To begin, open an SSH session to your G4dn server and verify the presence of NVIDIA drivers and CUDA by running:

nvidia-smi

Keep in mind that you need to have the SSH port open, the key pair created or assigned to the machine during creation, the external IP of the machine, and software like ssh for Linux or PuTTY for Windows to connect to the server. If the drivers are not installed, refer to our technical guide on installing NVIDIA drivers with CUDA on a G4dn instance.

Once you have confirmed the GPU drivers and CUDA are set up, proceed to install Ollama. You can opt for a quick installation using their binary, or choose to clone the repository for a manual installation. To install Ollama quickly, run the following command:

curl -fsSL https://ollama.com/install.sh | sh

Step 2: Running LLMs on Ollama

Let’s start with the Mistral model and view the results by running:

ollama run mistral

This command will download the Mistral model (4.1GB) and serve it, providing a prompt for immediate interaction with the model.

Not a bad response for a prompt written in Spanish! Now let’s experiment with a prompt to write code:

Impressive indeed. The response is not only generated rapidly, but the code also runs flawlessly, with basic error handling and explanations. (Here’s a pro tip: consider asking for code comments, docstrings, and even test functions to be incorporated into the code.) Exit with the /bye command.

Now, let’s enter the same prompt with Llama2. We can see that there are immediate, notable differences. This may be due to the training data it has encountered, as it defaulted to a playful and informal chat-style response.

Let’s try Llama2 using the same code prompt from above:

The results of this prompt are quite interesting. Across four separate tests, the generated responses contained not only broken code but also inconsistencies within the responses themselves.
It appears that writing code is not one of the out-of-the-box capabilities of Llama2 in this variant (7B parameters, although there are also versions specialized in code, like Code Llama), but results may vary.

Let’s run a final test with Code Llama, a Llama model fine-tuned to create and explain code. We will use the same prompt from above to write the code:

This time, the response is improved, with the code functioning properly and a satisfactory explanation provided. You now have the option to either continue exploring directly through this interface or start developing apps using the API.

Final test: A chat-like web interface

We now have something ready for immediate use. However, for some added fun, let’s install a chat-like web interface to mimic the experience of ChatGPT. For this test, we are going to use ollama-ui (https://github.com/ollama-ui/ollama-ui).

⚠︎ Please note that this project is no longer being maintained and users should transition to Open WebUI, but for the sake of simplicity, we are still going to use the ollama-ui front-end.

In your terminal window, clone the ollama-ui repository by entering the following command:

git clone https://github.com/ollama-ui/ollama-ui

Here’s a cool trick: when you run Ollama, it creates an API endpoint on port 11434. However, ollama-ui will run and be accessible on port 8000, so we’ll need to ensure both ports are securely accessible from our machine. Since we are currently running as a development service (without the security features and performance of a production web server), we will establish an SSH tunnel for both ports. This setup will enable us to access these ports exclusively from our local computer, with the traffic encrypted by SSH.
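The API endpoint on port 11434 mentioned above can also be called from code. The following is a minimal sketch rather than an official client: it assumes Ollama's REST route `/api/generate` with `model`, `prompt`, and `stream` fields, that the `mistral` model has already been downloaded, and that the endpoint is reachable at 127.0.0.1 (on the server itself or through an SSH tunnel). The helper names `build_generate_request` and `generate` are made up for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default port on this machine
# (directly on the server, or forwarded through an SSH tunnel).
OLLAMA_URL = "http://127.0.0.1:11434/api/generate"


def build_generate_request(model: str, prompt: str) -> bytes:
    """Encode the JSON body for a single, non-streaming completion."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(payload).encode("utf-8")


def generate(model: str, prompt: str, url: str = OLLAMA_URL,
             timeout: float = 120.0) -> str:
    """Send the prompt to Ollama and return the generated text."""
    request = urllib.request.Request(
        url,
        data=build_generate_request(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=timeout) as reply:
        body = json.loads(reply.read().decode("utf-8"))
    # With "stream": False the whole answer arrives in the "response" field.
    return body["response"]
```

With the service running, a call such as `generate("mistral", "Write a haiku about GPUs.")` should return the model's answer as a string. Streaming is switched off here only to keep the sketch short.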
To create the tunnel for both the web UI and the model’s API, close your current SSH session and open a new one with the following command:

ssh -L 8000:localhost:8000 -L 11434:127.0.0.1:11434 -i myKeyPair.pem ubuntu@<Machine_IP>

Once the tunnel is set up, navigate to the ollama-ui directory in a new terminal and run the following commands:

cd ollama-ui
make

Next, open your local browser and go to 127.0.0.1:8000 to enjoy the chat web interface!

Running an LLM model for text generation on Ubuntu on AWS with a GPU instance

While the interface is simple, it enables dynamic model switching, supports multiple chat sessions, and facilitates interaction beyond reliance on the terminal (aside from tunneling). This offers an alternative method for testing the models and your prompts.

Final thoughts

Thanks to Ollama and how simple it is to install the NVIDIA drivers on a GPU-based instance, we got a very straightforward process for running LLMs for text generation in your own environment. Additionally, Ollama facilitates the creation of custom model versions and fine-tuning, which is invaluable for developing and testing LLM-based solutions.

When selecting the appropriate model for your specific use case, it is crucial to evaluate each model’s capabilities based on its architecture and the data it has been trained on. Be sure to explore fine-tuned variants such as Llama2 for code, as well as specialized versions tailored for generating Python code.

Lastly, for those aiming to develop production-ready applications, remember to review the model license and plan for scalability, as a single GPU server may not suffice for multiple concurrent users. You may want to explore Amazon Bedrock, which offers easy access to various versions of these models through a simple API call, or Canonical MLOps, an end-to-end solution for training and running your own ML models.

Quick note regarding the model size

The size of the model significantly affects the quality of the results.
A larger model is more capable of producing better content (since it has a greater capacity to “learn”). Additionally, larger models offer a larger attention window (for “understanding” the context of the question), and allow more tokens as input (your instructions) and output (the response).

As an example, Llama2 offers three main model sizes: 7, 13, or 70 billion parameters. The first model requires a GPU with a minimum of 8GB of VRAM, whereas the second requires a minimum of 16GB of VRAM.

Let me share a final example: I will ask the 7B-parameter version of Llama2 to proofread an incorrect version of this simple Spanish phrase, “¿Hola, cómo estás?”, which translates to “Hi, how are you?” in English. I conducted numerous tests, all yielding incorrect results like the one displayed in the screenshot (where “óle” is not a valid word, and it erroneously suggests it means “hello”).

Now, let’s test the same example with Llama2 with 13 billion parameters:

While it failed to recognize that I intended to write “hola,” this outcome is significantly better, as it added accents and question marks and detected that “ola” wasn’t the right word to use (if you are curious, it means “wave”). View the full article
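The VRAM figures above follow roughly from the parameter count multiplied by the bytes stored per weight. The sketch below is a back-of-the-envelope estimate for the weights only. It ignores the context cache, activations, and runtime overhead, and the 16-bit and 4-bit widths are illustrative assumptions rather than figures from this article.

```python
def weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Approximate memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    total_bytes = params_billion * 1e9 * bits_per_weight / 8
    return total_bytes / 1e9


# Compare the three Llama2 sizes at two assumed weight widths.
for size in (7, 13, 70):
    fp16 = weight_memory_gb(size, 16)
    q4 = weight_memory_gb(size, 4)
    print(f"{size}B parameters: ~{fp16:.1f} GB at 16-bit, ~{q4:.1f} GB at 4-bit")
```

Under these assumptions, a 7B model with 4-bit weights needs about 3.5 GB, which is in the same range as the 4.1GB Mistral download mentioned earlier and leaves headroom on an 8GB GPU.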

-

UL Solutions is introducing a Stable Diffusion benchmark to its Procyon software next week. View the full article


-

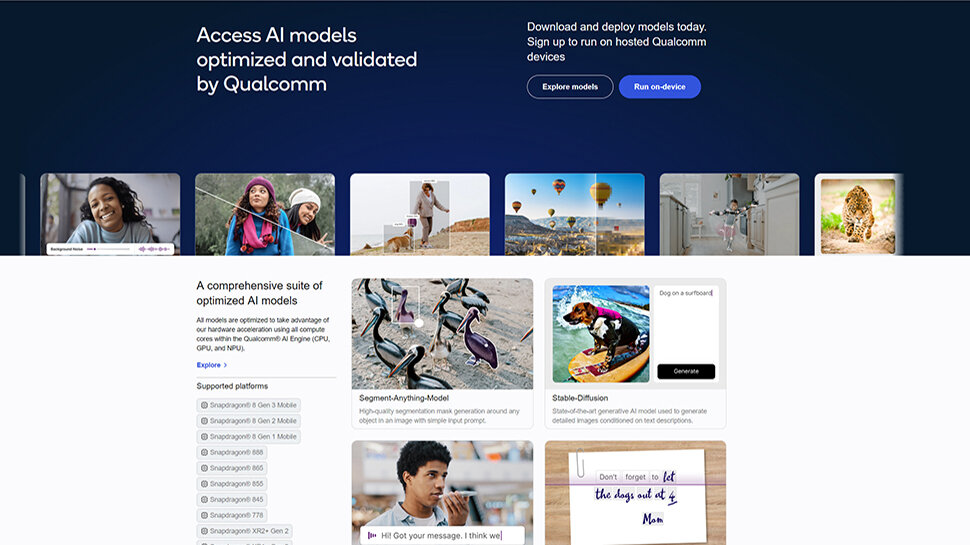

Qualcomm has unveiled its AI Hub, an all-inclusive library of pre-optimized AI models ready for use on devices running on Snapdragon and Qualcomm platforms. These models support a wide range of applications including natural language processing, computer vision, and anomaly detection, and are designed to deliver high performance with minimal power consumption, a critical factor for mobile and edge devices.

The AI Hub library currently includes more than 75 popular AI and generative AI models including Whisper, ControlNet, Stable Diffusion, and Baichuan 7B. All models are bundled in various runtimes and are optimized to leverage the Qualcomm AI Engine's hardware acceleration across all cores (NPU, CPU, and GPU). According to Qualcomm, they’ll deliver inference that is four times faster.

Documentation and tutorials provided

The AI Hub also handles model translation from the source framework to popular runtimes automatically. It works directly with the Qualcomm AI Engine Direct SDK and applies hardware-aware optimizations. Developers can search for models based on their needs, download them, and integrate them into their applications, saving time and resources. The AI Hub also provides tools and resources for developers to customize these models, and they can fine-tune them using the Qualcomm Neural Processing SDK and the AI Model Efficiency Toolkit, both available on the platform.

To use the AI Hub, developers need a trained model in PyTorch, TorchScript, ONNX, or TensorFlow Lite format, and a good understanding of the deployment target, which can be a specific device (like the Samsung Galaxy S23 Ultra) or a range of devices.

The AI Hub is not exclusively for experienced developers, however. It also serves as a learning platform, providing comprehensive documentation and tutorials for those venturing into the world of AI. Qualcomm plans to regularly update the AI Hub with new models and support for additional platforms and operating systems.
Developers can sign up to access these models on cloud-hosted devices based on Qualcomm platforms and get early access to new features and AI models. View the full article

-

As generative AI and hybrid working environments become increasingly common, professionals across all industries need powerful, AI-accelerated business laptops to keep up. AI is being rapidly integrated into professional design, content creation workflows, and everyday productivity applications, highlighting the need for robust local AI acceleration and ample processing power.

Nvidia wants to make AI more accessible to everyone with the launch of its new RTX 500 and RTX 1000 Ada Generation Laptop GPUs. These GPUs, tailored for professionals on the go, will be offered in portable mobile workstations, broadening the Ada Lovelace architecture-based selection.

The next-gen mobile workstations, equipped with Ada Generation GPUs, will feature both an NPU and an Nvidia RTX GPU, including Tensor Cores for AI processing. This combination allows for light AI tasks to be handled by the NPU, while the GPU provides up to an additional 682 TOPS of AI performance for more demanding workflows.

Optimized for AI workloads

The new RTX 500 GPU reportedly offers up to 14x the generative AI performance for models like Stable Diffusion, up to 3x faster photo editing with AI, and up to 10x the graphics performance for 3D rendering compared to a CPU-only configuration.

The RTX 500 and 1000 GPUs are designed to enhance professional workflows across industries. Nvidia suggests they will allow video editors to streamline tasks such as background noise removal with AI, graphic designers to revive blurry images with AI upscaling, and professionals to enjoy higher-quality video conferencing and streaming experiences on the go.

The new Nvidia RTX 500 and 1000 Ada Generation Laptop GPUs will be available this spring in laptops from the likes of Dell, HP, Lenovo, and MSI.

While there’s no doubting the appeal of the new RTX 500 and RTX 1000 laptop GPUs, it's worth considering whether the Nvidia RTX 4000 GPUs might be a better choice.
They're likely to be cheaper and more powerful in a standard laptop, offering an alternative option for those looking to build an LLM. View the full article

-

Massively popular audio editing tool Audacity is getting a major upgrade thanks to Intel. The tech giant has developed a suite of OpenVINO plugins for Audacity, bringing a number of AI-powered editing features to the software. These AI plugins, which run entirely on your PC, include noise suppression and transcription for spoken word content, and generation and separation plugins for music.

Windows only... for now

The noise suppression feature functions similarly to Audacity's own built-in Noise Removal effect, suppressing background noise for a clearer sound. The transcription tool, powered by Whisper.cpp, can transcribe and translate words, outputting to a label track. Users can export these transcriptions through the software's export feature.

For music, the AI tools offer music generation and remixing capabilities using Stable Diffusion. The music separation feature can split a song into its vocal and instrumental parts, or into vocals, drums, bass, and a combined "anything else" part. This is ideal for creating covers and playalongs.

These OpenVINO plugins are available for download now, but currently only for Windows. It's possible Linux and macOS users will get access to the plugins in a future release.

In addition to these enhancements, Audio.com is eyeing the next step in its development. The company is building a creator-first platform and plans to include a cloud-saving feature that allows creators to work on Audacity projects saved directly to the cloud. This feature provides a convenient way to share projects, get instant feedback, and collaborate with others. Audio.com expects to launch a beta release of this feature very soon.

While many Audio.com features will remain free, the new cloud storage feature will come at a small cost after the first five projects, to cover additional expenses incurred by the team. Audacity itself will remain 100% free and open source.
View the full article

-

Generative AI has become the number one technology of interest across many industries over the past year. Here at Google Cloud for Games, we think that online game use cases have some of the highest potential for generative AI, giving creators the power to build more dynamic games, monetize their games better, and get to market faster. As part of this, we’ve explored ways that games companies can train, deploy, and maintain gen AI utilizing Google Cloud. We'd like to walk you through what we’ve been working on, and how you can start using it in your game today. While we’ll focus on gen AI applications, the framework we’ll be discussing has been developed with all machine learning in mind, not just the generative varieties.

Long term, the possibilities of gen AI in games are endless, but in the near term, we believe the following are the most realistic and valuable to the industry over the next 1-2 years:

- Game production
- Adaptive gameplay
- In-game advertising

Each of these helps with a core part of the game development and publishing process. Generative AI in game production, mainly in the development of 2D textures, 3D assets, and code, can help decrease the effort to create a new game, decrease the time to market, and help make game developers more effective overall. Thinking towards sustaining player engagement and monetizing existing titles, ideas like adaptive dialogue and gameplay can keep players engaged, and custom in-game objects can keep them enticed. In-game advertising opens a new realm of monetization, and allows us not only to hyper-personalize ads to viewers, but to personalize their placement and integration into the game, creating seamless ad experiences that optimize views and engagement.

If you think about the time to produce a small game, never mind a AAA blockbuster, development of individual game assets consumes an immense amount of time. If generative models can help reduce developer toil and increase the productivity of studio development teams by even a fraction, it could represent a faster time to market and better games for us all.

As part of this post, we introduce our Generative AI Framework for Games, which provides templates for running gen AI for games on Google Cloud, as well as a framework for data ingest and storage to support these live models. We walk you through a demo of this framework below, where we specifically show two cases around image generation and code generation in a sample game environment. But before we jump into what we’re doing here at Google Cloud, let’s first tackle a common misconception about machine learning in games.

Cloud-based ML plus live services games are a go

It’s a common refrain that running machine learning in the cloud for live game services is prohibitive, either in cost or in the latency that the end user experiences. Live games have always run on a client-server paradigm, and it’s often preferable that compute-intensive processes that don’t need to be authoritative run on the client. While this is a great deployment pattern for some models and processes, it’s not the only one. Cloud-based gen AI, or really any form of AI/ML, is not only possible, but can result in significantly decreased toil for developers and reduced maintenance costs for publishers, all while supporting the latencies needed for today’s live games. It’s also safer: cloud-based AI safeguards your models from attacks, manipulation, and fraud.

Depending on your studio’s setup, Google Cloud can support complete in-cloud or hybrid deployments of generative models for adaptive game worlds. Generally, we recommend two approaches depending on your technology stack and needs:

- If starting from scratch, we recommend utilizing Vertex AI’s Private Endpoints for low latency serving, which can work whether you are looking for a low ops solution, or are running a service that does not interact with a live game environment.
- If running game servers on Google Cloud, especially if they are on Google Kubernetes Engine (GKE), and you are looking to utilize that environment for ultra-low latency serving, we recommend deploying your models on GKE alongside your game server.

Let’s start with Vertex AI. Vertex AI supports both public and private endpoints, although for games, we generally recommend utilizing Private Endpoints to achieve the appropriate latencies. Vertex AI models utilize what we call an adaptor layer, which has two advantages: you don’t need to call the entire model when making a prediction, and any fine tuning conducted by you, the developer, is contained in your tenant. Compared to running a model yourself, whether in the cloud or on prem, this negates the need to handle enormous base models and the relevant serving and storage infrastructure to support them. As mentioned, we’ll show both of these in the demo below.

If you’re already running game servers on GKE, you can gain a lot of benefit from running both proprietary and open-source machine learning models on GKE as well as taking advantage of GKE’s native networking. With GKE Autopilot, our tests indicate that you can achieve prediction performance in the sub-millisecond range when deployed alongside your game servers. Over the public internet, we’ve achieved low millisecond latencies that are consistent with, if not better than, what we have seen in classic client-side deployments.

If you’re afraid of the potential cost implications of running on GKE, think again: the vast majority of gaming customers see cost savings from deploying on GKE, alongside a roughly 30% increase in developer productivity. If you manage both your machine learning deployments and your game servers with GKE Autopilot, there’s also a significant reduction in operational burden. In our testing, we’ve found that whether you are deploying models on Vertex or GKE, the cost is roughly comparable.

Unified data platforms enable real-time AI

AI/ML driven personalization thrives on large amounts of data regarding player preferences, gameplay, and the game’s world and lore. As part of our efforts in gen AI in games, we’ve developed a data pipeline and database template that utilizes the best of Google Cloud to ensure consistency and availability.

Live games require strong consistency, and models, whether generative or not, require the most up-to-date information about a player and their habits. Periodic retraining is necessary to keep models fresh and safe, and globally available databases like Spanner and BigQuery ensure that the data being fed into models, generative or otherwise, is kept fresh and secure.

In many current games, users are fragmented by maps/realms, with hard lines between them, keeping experiences bounded by firm decisions and actions. As games move towards models where users inhabit singular realms, these games will require a single, globally available data store. In-game personalization also requires the live status of player activity. A strong data pipeline and data footprint is just as important for running machine learning models in a liveops environment as the models themselves. Considering the complexity of frequent model updates across a self-managed data center footprint, we maintain it’s a lighter lift to manage the training, deployment, and overall maintenance of models in the cloud.

By combining a real-time data pipeline with generative models, we can also inform model prompts about player preferences, or combine them with other models that track where, when, and why to personalize the game state.
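As a rough illustration of informing model prompts with player preferences, the sketch below folds a player record into the text sent to a generative model. Everything in it is hypothetical: the `PlayerProfile` fields, the `build_prompt` helper, and the prompt wording are assumptions for illustration and are not taken from the framework's code.

```python
from dataclasses import dataclass, field


@dataclass
class PlayerProfile:
    """Hypothetical snapshot of what the data pipeline knows about a player."""
    player_id: str
    favorite_colors: list[str] = field(default_factory=list)
    recent_actions: list[str] = field(default_factory=list)


def build_prompt(profile: PlayerProfile, request: str, max_actions: int = 3) -> str:
    """Combine the player's request with preference context for the model."""
    colors = ", ".join(profile.favorite_colors) or "unknown"
    # Keep only the most recent actions so the prompt stays short.
    actions = "; ".join(profile.recent_actions[-max_actions:]) or "none"
    return (
        f"Player preferences: colors={colors}\n"
        f"Recent actions: {actions}\n"
        f"Request: {request}"
    )


profile = PlayerProfile("p-001", ["yellow"], ["talked to NPC", "viewed billboard"])
print(build_prompt(profile, "Change the bus color"))
```

In a real deployment the profile would presumably be read from the transactional store at request time, and the resulting string would be passed to whichever model endpoint serves the game.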
In terms of what is available today, this could be anything from pre-generated 3D meshes that are relevant to the user, to retexturing meshes with different colors, patterns, or lighting to match player preferences or mood, or even giving the player the ability to fully customize the game environment based on natural language. All of this is in service of keeping our players happy and engaged with the game.

Demoing capabilities

Let’s jump into the framework. For the demo, we’ll be focusing on how Google Cloud’s data, AI, and compute technology can come together to provide real-time personalization of the game state.

The framework includes:

- Unity for the client and server
- Open source: Terraform, Agones
- Google Cloud: GKE, Vertex AI, Pub/Sub, Dataflow, Spanner, BigQuery

As part of this framework, we created an open-world demo game in Unity that uses assets from the Unity store. We designed this to be an open world game, one where the player needs to interact with NPCs and is guided through dynamic billboards that assist the player in achieving the game objective. This game is running on GKE with Agones, and is designed to support multiple players. For simplicity, we focus on one player and their actions.

Generative AI in Games - Demo Game

Now, back to the framework. Our back-end Spanner database contains information on the player and their past actions. We also have data on their purchasing habits across this make-believe game universe, with a connection to the Google Marketing Platform. This allows us in our demo game to start collecting universal player data across platforms. Spanner is our transactional database, and BigQuery is our analytical database, and data flows freely between them.

As part of this framework, we trained recommendation models in Vertex AI utilizing everything we know about the player, so that we can personalize in-game offers and advertising. For the sake of this demo, we’ll forget about those models for a moment, and focus on the generative AI use cases for adaptive gameplay: image generation, NPC chat, and code generation. To show you both deployment patterns that we recommend for games, deploying on GKE alongside the game server and utilizing Vertex AI, we host an open-source Stable Diffusion model on GKE for image generation, and for code generation and NPC chat we’re using the gemini-pro model within Vertex AI. In cases where textures need to be modified or game objects are repositioned, we are using the Gemini LLM to generate code that can render, position, and configure prefabs within the game environment.

As the character walks through the game, we adaptively show images to suggest potential next moves and paths for the player. In practice, these could be game-themed images or even advertisements. In our case, we display images that suggest what the player should be looking for to progress gameplay.

Generative AI in Games - Demo Game

In the example above, the player is shown a man surrounded by books, which provides a hint to the player that maybe they need to find a library as their next objective. That hint also aligns with the riddle that the NPC shared earlier in the game. If a player interacts with one of these billboards, which may mean moving closer to it or even viewing the billboard for a preset time, then the storyline of our game adapts to that context.

We can also load and configure prefabs on the fly with code generation. Below, you’ll see our environment as is, and we ask the NPC to change the bus color to yellow, which dynamically updates the bus color and texture.

Generative AI in Games - Demo Game

Once we make the request, either by text or speech, Google Cloud gen AI models generate the exact code needed to update the prefab in the environment, and then render it live in the game. While this example shows how code generation can be used in-game, game developers can also use a similar process to place and configure game objects within their game environment to speed up game development.

If you would like to take the next step and check out the technology, then we encourage you to explore the GitHub link and resources below. Additionally, we understand that not everyone will be interested in every facet of the framework. That's why we've made it flexible: whether you want to dive into the entire project or just work with specific parts of the code to understand how we implemented a certain feature, the choice is yours.

If you're looking to deepen your understanding of Google Cloud generative AI, check out this curated set of resources that can help:

- Generative AI on Google Cloud
- Getting started with generative AI on Vertex

Last but not least, if you’re interested in working with the project or would like to contribute to it, feel free to explore the code on GitHub, which focuses on the gen AI services used as part of this demo:

- Generative AI Quickstart for Gaming

View the full article

-

It was only a matter of time before someone added generative AI to an AR headset, and taking the plunge is start-up company Brilliant Labs with its recently revealed Frame smart glasses.

Looking like a pair of Where’s Waldo glasses (or Where’s Wally to our UK readers), the Frame houses a multimodal digital assistant called Noa. It consists of multiple AI models from other brands working together to help users learn about the world around them. These lessons can be done just by looking at something and then issuing a command. Let’s say you want to know more about the nutritional value of a raspberry. Thanks to OpenAI tech, you can command Noa to perform a “visual analysis” of the subject. The read-out appears on the outer AR lens. Additionally, it can offer real-time language translation via Whisper AI.

The Frame can also search the internet via its Perplexity AI model. Search results will even provide price tags for potential purchases. In a recent VentureBeat article, Brilliant Labs claims Noa can provide instantaneous price checks for clothes just by scanning the piece, or fish out home listings for new houses on the market. All you have to do is look at the house in question. It can even generate images on the fly through Stable Diffusion, according to ZDNET.

Evolving assistant

Going back to VentureBeat, their report offers a deeper insight into how Noa works. The digital assistant is always on, constantly taking in information from its environment. And it’ll apparently “adopt a unique personality” over time. The publication explains that upon activating for the first time, Noa appears as an “egg” on the display. Owners will have to answer a series of questions, and upon finishing, the egg hatches into a character avatar whose personality reflects the user. As the Frame is used, Noa analyzes the interactions between it and the user, evolving to become better at tackling tasks.

(Image credit: Brilliant Labs)

An exploded view of the Frame can be found on Brilliant Labs’ official website, providing interesting insight into how the tech works. On-screen content is projected by a micro-OLED onto a “geometric prism” in the lens. 9To5Google points out this is reminiscent of how Google Glass worked. On the nose bridge is the Frame’s camera sitting on a PCBA (printed circuit board assembly).

At the end of the stems, you have the batteries inside two big hubs. Brilliant Labs states the frames can last a whole day, and to charge them, you’ll have to plug in the Mister Power dongle, inadvertently turning the glasses into a high-tech Groucho Marx impersonation.

(Image credit: Brilliant Labs)

Availability

Currently open for pre-order, the Frame will run you $350 a pair. It’ll be available in three colors: Smokey Black, Cool Gray, and the transparent H20. You can opt for prescription lenses. Doing so will bump the price tag to $448. There's a chance Brilliant Labs won’t have your exact prescription. They recommend instead selecting the option that most closely matches your actual prescription. Shipping is free and the first batch rolls out April 15.

It appears all of the AI features are subject to a daily usage cap. Brilliant Labs has plans to launch a subscription service lifting the limit. We reached out to the company for clarification and asked several other questions, such as exactly how the Frame receives input. This story will be updated at a later time.

Until then, check out TechRadar's list of the best VR headsets for 2024. View the full article

-

Build Multimodal GenAI Apps with OctoAI and Docker

Docker posted a topic in Artificial Intelligence

This post was contributed by Thierry Moreau, co-founder and head of DevRel at OctoAI. Generative AI models have shown immense potential over the past year with breakthrough models like GPT3.5, DALL-E, and more. In particular, open source foundational models have gained traction among developers and enterprise users who appreciate how customizable, cost-effective, and transparent these models are compared to closed-source alternatives. In this article, we’ll explore how you can compose an open source foundational model into a streamlined image transformation pipeline that lets you manipulate images with nothing but text to achieve surprisingly good results. With this approach, you can create fun versions of corporate logos, bring your kids’ drawings to life, enrich your product photography, or even remodel your living room (Figure 1). Figure 1: Examples of image transformation including, from left to right: Generating creative corporate logo, bringing children’s drawings to life, enriching commercial photography, remodeling your living room Pretty cool, right? Behind the scenes, a lot needs to happen, and we’ll walk step by step through how to reproduce these results yourself. We call the multimodal GenAI pipeline OctoShop as a nod to the popular image editing software. Feeling inspired to string together some foundational GenAI models? Let’s dive into the technology that makes this possible. Architecture overview Let’s look more closely at the open source foundational GenAI models that compose the multimodal pipeline we’re about to build. Going forward, we’ll use the term “model cocktail” instead of “multimodal GenAI model pipeline,” as it flows a bit better (and sounds tastier, too). A model cocktail is a mix of GenAI models that can process and generate data across multiple modalities: text and images are examples of data modalities across which GenAI models consume and produce data, but the concept can also extend to audio and video (Figure 2). 
To build on the analogy of crafting a cocktail (or mocktail, if you prefer), you’ll need to mix ingredients, which, when assembled, are greater than the sum of their individual parts. Figure 2: The multimodal GenAI workflow — by taking an image and text, this pipeline transforms the input image according to the text prompt. Let’s use a Negroni, for example — my favorite cocktail. It’s easy to prepare; you need equal parts of gin, vermouth, and Campari. Similarly, our OctoShop model cocktail will use three ingredients: an equal mix of image-generation (SDXL), text-generation (Mistral-7B), and a custom image-to-text generation (CLIP Interrogator) model. The process is as follows: CLIP Interrogator takes in an image and generates a textual description (e.g., “a whale with a container on its back”). An LLM model, Mistral-7B, will generate a richer textual description based on a user prompt (e.g., “set the image into space”). The LLM will consequently transform the description into a richer one that meets the user prompt (e.g., “in the vast expanse of space, a majestic whale carries a container on its back”). Finally, an SDXL model will be used to generate a final AI-generated image based on the textual description transformed by the LLM model. We also take advantage of SDXL styles and a ControlNet to better control the output of the image in terms of style and framing/perspective. Prerequisites Let’s go over the prerequisites for crafting our cocktail. Here’s what you’ll need: Sign up for an OctoAI account to use OctoAI’s image generation (SDXL), text generation (Mistral-7B), and compute solutions (CLIP Interrogator) — OctoAI serves as the bar from which to get all of the ingredients you’ll need to craft your model cocktail. If you’re already using a different compute service, feel free to bring that instead. Run a Jupyter notebook to craft the right mix of GenAI models. This is your place for experimenting and mixing, so this will be your cocktail shaker. 
To make it easy to run and distribute the notebook, we’ll use Google Colab. Finally, we’ll deploy our model cocktail as a Streamlit app. Think of building your app and embellishing the frontend as the presentation of your cocktail (e.g., glass, ice, and choice of garnish) to enhance your senses. Getting started with OctoAI Head to octoai.cloud and create an account if you haven’t done so already. You’ll receive $10 in credits upon signing up for the first time, which should be sufficient for you to experiment with your own workflow here. Follow the instructions on the Getting Started page to obtain an OctoAI API token — this will help you get authenticated whenever you use the OctoAI APIs. Notebook walkthrough We’ve built a Jupyter notebook in Colab to help you learn how to use the different models that will constitute your model cocktail. Here are the steps to follow: 1. Launch the notebook Get started by launching the following Colab notebook. There’s no need to change the runtime type or rely on a GPU or TPU accelerator — all we need is a CPU here, given that all of the AI heavy-lifting is done on OctoAI endpoints. 2. OctoAI SDK setup Let’s get started by installing the OctoAI SDK. You’ll use the SDK to invoke the different open source foundational models we’re using, like SDXL and Mistral-7B. You can install through pip: # Install the OctoAI SDK !pip install octoai-sdk In some cases, you may get a message about pip packages being previously imported in the runtime, causing an error. If that’s the case, selecting the Restart Session button at the bottom should take care of the package versioning issues. After this, you should be able to re-run the cell that pip-installs the OctoAI SDK without any issues. 3. Generate images with SDXL You’ll first learn to generate an image with SDXL using the Image Generation solution API. To learn more about what each parameter does in the code below, check out OctoAI’s ImageGenerator client. 
In particular, the ImageGenerator API takes several arguments to generate an image:

- Engine: Lets you choose between versions of Stable Diffusion models, such as SDXL, SD1.5, and SSD.
- Prompt: Describes the image you want to generate.
- Negative prompt: Describes the traits you want to avoid in the final image.
- Width, height: The resolution of the output image.
- Num images: The number of images to generate at once.
- Sampler: Determines the sampling method used to denoise your image. If you’re not familiar with this process, this article provides a comprehensive overview.
- Number of steps: Number of denoising steps; the more steps, the higher the quality, but generally going past 30 will lead to diminishing returns.
- Cfg scale: How closely to adhere to the image description; generally stays around 7-12.
- Use refiner: Whether to apply the SDXL refiner model, which improves the output quality of the image.
- Seed: A parameter that lets you control the reproducibility of image generation (set to a positive value to always get the same image given stable input parameters).

Note that tweaking the image generation parameters (number of steps, number of images, sampler used, etc.) affects the amount of GPU compute needed to generate an image. Increasing GPU cycles will affect the pricing of generating the image.
Here’s an example using simple parameters:

    # Import the OctoAI image generation client.
    # OCTOAI_API_TOKEN is the API token obtained in the Getting Started step.
    from octoai.clients.image_gen import Engine, ImageGenerator

    # Now let's use the OctoAI Image Generation API to generate
    # an image of a whale with a container on its back to recreate
    # the moby logo
    image_gen = ImageGenerator(token=OCTOAI_API_TOKEN)
    image_gen_response = image_gen.generate(
        engine=Engine.SDXL,
        prompt="a whale with a container on its back",
        negative_prompt="blurry photo, distortion, low-res, poor quality",
        width=1024,
        height=1024,
        num_images=1,
        sampler="DPM_PLUS_PLUS_2M_KARRAS",
        steps=20,
        cfg_scale=7.5,
        use_refiner=True,
        seed=1
    )
    images = image_gen_response.images

    # Display generated image from OctoAI
    for i, image in enumerate(images):
        pil_image = image.to_pil()
        display(pil_image)

Feel free to experiment with the parameters to see what happens to the resulting image. In this case, I’ve put in a simple prompt meant to describe the Docker logo: “a whale with a container on its back.” I also added standard negative prompts to help generate the style of image I’m looking for. Figure 3 shows the output:

Figure 3: An SDXL-generated image of a whale with a container on its back.

4. Control your image output with ControlNet

One thing you may want to do with SDXL is control the composition of your AI-generated image. For example, you can specify a specific human pose or control the composition and perspective of a given photograph. For our experiment using Moby (the Docker mascot), we’d like to get an AI-generated image that can be easily superimposed onto the original logo: same shape of whale and container, orientation of the subject, size, and so forth. This is where ControlNet can come in handy: it lets you constrain the generation of images by feeding a control image as input. In our example we’ll feed the image of the Moby logo as our control input.
By tweaking the following parameters used by the ImageGenerator API, we are constraining the SDXL image generation with a control image of Moby. That control image will be converted into a depth map using a depth estimation model, then fed into the ControlNet, which will constrain SDXL image generation.

    # Set the engine to controlnet SDXL
    engine="controlnet-sdxl",
    # Select depth controlnet which uses a depth map to apply
    # constraints to SDXL
    controlnet="depth_sdxl",
    # Set the conditioning scale anywhere between 0 and 1, try different
    # values to see what they do!
    controlnet_conditioning_scale=0.3,
    # Pass in the base64 encoded string of the moby logo image
    controlnet_image=image_to_base64(moby_image),

Now the result matches the Moby outline a lot more closely (Figure 4). This is the power of ControlNet. You can adjust the strength by varying the controlnet_conditioning_scale parameter. This way, you can make the output image more or less faithfully match the control image of Moby.

Figure 4: Left: The Moby logo is used as a control image to a ControlNet. Right: the SDXL-generated image resembles the control image more closely than in the previous example.

5. Control your image output with SDXL style presets

Let’s add a layer of customization with SDXL styles. We’ll use the 3D Model style preset (Figure 5). Behind the scenes, these style presets add extra keywords to the positive and negative prompts that the SDXL model ingests.

Figure 5: You can try various styles on the OctoAI Image Generation solution UI; there are more than 100 to choose from, each delivering a unique feel and aesthetic.

Figure 6 shows how setting this one parameter in the ImageGenerator API transforms our AI-generated image of Moby. Go ahead and try out more styles; we’ve generated a gallery for you to get inspiration from.

Figure 6: SDXL-generated image of Moby with the “3D Model” style preset applied.

6. Manipulate images with Mistral-7B LLM

So far we’ve relied on SDXL, which does text-to-image generation. We’ve added ControlNet into the mix to apply a control image as a compositional constraint. Next, we’re going to layer an LLM into the mix to transform our original image prompt into a creative and rich textual description based on a “transformation prompt.” Basically, we’re going to use an LLM to make our prompt better automatically. This will allow us to perform image manipulation using text in our OctoShop model cocktail pipeline:

- Take a logo of Moby: Set it into an ultra-realistic photo in space.
- Take a child’s drawing: Bring it to life in a fantasy world.
- Take a photo of a cocktail: Set it on a beach in Italy.
- Take a photo of a living room: Transform it into a staged living room in a designer house.

To achieve this text-to-text transformation, we will use the LLM user prompt as follows. This sets the original textual description of Moby into a new setting: the vast expanse of space.

    '''
    Human: set the image description into space: “a whale with a container on its back”
    AI: '''

We’ve configured the LLM system prompt so that LLM responses are concise and at most one sentence long. We could make them longer, but be aware that the prompt consumed by SDXL has a 77-token context limit. You can read more on the text generation Python SDK and its Chat Completions API used to generate text:

- Model: Lets you choose from a selection of foundational open source models like Mixtral, Mistral, Llama2, and Code Llama (the selection will grow as more open source models are released).
- Messages: Contains a list of messages (system and user) to use as context for the completion.
- Max tokens: Enforces a hard limit on output tokens (this could cut a completion response in the middle of a sentence).
- Temperature: Lets you control the creativity of your answer: with a higher temperature, less likely tokens can be selected.
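To make the temperature setting more concrete, here is a minimal sketch of the usual way temperature rescales token scores before sampling. It is not taken from OctoAI or Mistral code: the function name `softmax_with_temperature` and the toy scores are made up for illustration, and the standard softmax formulation is assumed.

```python
import math


def softmax_with_temperature(logits, temperature):
    """Turn raw scores into probabilities; temperature sharpens or flattens them."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [score / temperature for score in logits]
    peak = max(scaled)  # subtract the max for numerical stability
    weights = [math.exp(score - peak) for score in scaled]
    total = sum(weights)
    return [weight / total for weight in weights]


# Made-up scores for three candidate tokens (illustration only).
toy_logits = [2.0, 1.0, 0.0]

low = softmax_with_temperature(toy_logits, 0.1)   # near-deterministic
high = softmax_with_temperature(toy_logits, 2.0)  # flatter distribution

print("temperature 0.1:", [round(p, 3) for p in low])
print("temperature 2.0:", [round(p, 3) for p in high])
```

With a low temperature almost all of the probability goes to the top-scoring token, which is consistent with the very low values (0.01 and 0.1) used in this article to keep the rewritten prompt predictable.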
The choice of model, input, and output tokens will influence pricing on OctoAI. In this example, we’re using Mistral-7B, which is a great open source LLM that really packs a punch given its small parameter size. Let’s look at the code used to invoke our Mistral-7B LLM:

    # Let's go ahead and start with the original prompt that we used in our
    # image generation examples.
    image_desc = "a whale with a container on its back"

    # Let's then prepare our LLM prompt to manipulate our image
    llm_prompt = '''
    Human: set the image description into space: {}
    AI: '''.format(image_desc)

    # Now let's use an LLM to transform this craft clay rendition
    # of Moby into a fun sci-fi universe
    from octoai.client import Client

    client = Client(OCTOAI_API_TOKEN)
    completion = client.chat.completions.create(
        messages=[
            {
                "role": "system",
                "content": "You are a helpful assistant. Keep your responses short and limited to one sentence."
            },
            {
                "role": "user",
                "content": llm_prompt
            }
        ],
        model="mistral-7b-instruct-fp16",
        max_tokens=128,
        temperature=0.01
    )

    # Print the message we get back from the LLM
    llm_image_desc = completion.choices[0].message.content
    print(llm_image_desc)

Here’s the output:

Our LLM has created a short yet imaginative description of Moby traveling through space. Figure 7 shows the result when we feed this LLM-generated textual description into SDXL.

Figure 7: SDXL-generated image of Moby where we used an LLM to set the scene in space and enrich the text prompt.

This image is great. We can feel the immensity of space. With the power of LLMs and the flexibility of SDXL, we can take image creation and manipulation to new heights. And the great thing is, all we need to manipulate those images is text; the GenAI models do the rest of the work.

7. Automate the workflow with AI-based image labeling

So far in our image transformation pipeline, we’ve had to manually label the input image to our OctoShop model cocktail.
Instead of just passing in the image of Moby, we had to provide a textual description of that image. Thankfully, we can rely on a GenAI model to perform text labeling tasks: CLIP Interrogator. Think of this task as the reverse of what SDXL does: It takes in an image and produces text as the output. To get started, we’ll need a CLIP Interrogator model running behind an endpoint somewhere. There are two ways to get a CLIP Interrogator model endpoint on OctoAI. If you’re just getting started, we recommend the simple approach, and if you feel inspired to customize your model endpoint, you can use the more advanced approach. For instance, you may be interested in trying out the more recent version of CLIP Interrogator. You can now invoke the CLIP Interrogator model in a few lines of code. We’ll use the fast interrogator mode here to get a label generated as quickly as possible.

    # Let's go ahead and invoke the CLIP interrogator model
    # Note that under a cold start scenario, you may need to wait a minute or two
    # to get the result of this inference... Be patient!
    output = client.infer(
        endpoint_url=CLIP_ENDPOINT_URL+'/predict',
        inputs={
            "image": image_to_base64(moby_image),
            "mode": "fast"
        }
    )

    # All labels
    clip_labels = output["completion"]["labels"]
    print(clip_labels)

    # Let's get just the top label
    top_label = clip_labels.split(',')[0]
    print(top_label)

The top label described our Moby logo as:

That’s pretty on point. Now that we’ve tested all ingredients individually, let’s assemble our model cocktail and test it on interesting use cases.

8. Assembling the model cocktail

Now that we have tested our three models (CLIP Interrogator, Mistral-7B, SDXL), we can package them into one convenient function, which takes the following inputs:

- An input image that will be used to control the output image and also be automatically labeled by our CLIP Interrogator model.
- A transformation string that describes the transformation we want to apply to the input image (e.g., “set the image description in space”).
- A style string which lets us better control the artistic output of the image independently of the transformation we apply to it (e.g., painterly style vs. cinematic).

The function below is a rehash of all of the code we’ve introduced above, packed into one function. It returns the top CLIP label, the LLM-generated description, and the generated image.

    def genai_transform(image: Image, transformation: str, style: str) -> tuple[str, str, Image]:
        # Step 1: CLIP captioning
        output = client.infer(
            endpoint_url=CLIP_ENDPOINT_URL+'/predict',
            inputs={
                "image": image_to_base64(image),
                "mode": "fast"
            }
        )
        clip_labels = output["completion"]["labels"]
        top_label = clip_labels.split(',')[0]

        # Step 2: LLM transformation
        llm_prompt = '''
        Human: {}: {}
        AI: '''.format(transformation, top_label)
        completion = client.chat.completions.create(
            messages=[
                {
                    "role": "system",
                    "content": "You are a helpful assistant. Keep your responses short and limited to one sentence."
                },
                {
                    "role": "user",
                    "content": llm_prompt
                }
            ],
            model="mistral-7b-instruct-fp16",
            max_tokens=128,
            presence_penalty=0,
            temperature=0.1,
            top_p=0.9,
        )
        llm_image_desc = completion.choices[0].message.content

        # Step 3: SDXL+controlnet transformation
        image_gen_response = image_gen.generate(
            engine="controlnet-sdxl",
            controlnet="depth_sdxl",
            controlnet_conditioning_scale=0.4,
            controlnet_image=image_to_base64(image),
            prompt=llm_image_desc,
            negative_prompt="blurry photo, distortion, low-res, poor quality",
            width=1024,
            height=1024,
            num_images=1,
            sampler="DPM_PLUS_PLUS_2M_KARRAS",
            steps=20,
            cfg_scale=7.5,
            use_refiner=True,
            seed=1,
            style_preset=style
        )
        images = image_gen_response.images

        # Convert the first generated image to a PIL image
        pil_image = images[0].to_pil()
        return top_label, llm_image_desc, pil_image

Now you can try this out on several images, prompts, and styles.
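The snippets above call `image_to_base64`, but the article never shows that helper; it presumably lives in the Colab notebook. The following is one possible sketch, not the notebook's actual implementation. It assumes the input is a PIL image (or any object exposing `save(fp, format=...)`), and the PNG default is also an assumption.

```python
import base64
import io


def image_to_base64(image, image_format="PNG"):
    """Serialize an image to a base64-encoded string (hypothetical helper).

    Assumes `image` behaves like a PIL Image, i.e. it offers
    save(file_like_object, format=...).
    """
    buffer = io.BytesIO()
    # Write the encoded image bytes into an in-memory buffer.
    image.save(buffer, format=image_format)
    # Base64-encode the bytes and return them as text, ready for a JSON payload.
    return base64.b64encode(buffer.getvalue()).decode("utf-8")
```

Encoding to text is what allows the image to travel inside the JSON request bodies used by the `controlnet_image` parameter and the CLIP Interrogator endpoint.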
Package your model cocktail into a web app Now that you’ve mixed your unique GenAI cocktail, it’s time to pour it into a glass and garnish it, figuratively speaking. We built a simple Streamlit frontend that lets you deploy your unique OctoShop GenAI model cocktail and share the results with your friends and colleagues (Figure 8). You can check it on GitHub. Follow the README instructions to deploy your app locally or get it hosted on Streamlit’s web hosting services. Figure 8: The Streamlit app transforms images into realistic renderings in space — all thanks to the magic of GenAI. We look forward to seeing what great image-processing apps you come up with. Go ahead and share your creations on OctoAI’s Discord server in the #built_with_octo channel! If you want to learn how you can put OctoShop behind a Discord Bot or build your own model containers with Docker, we also have instructions on how to do that from an AI/ML workshop organized by OctoAI at DockerCon 2023. About OctoAI OctoAI provides infrastructure to run GenAI at scale, efficiently, and robustly. The model endpoints that OctoAI delivers to serve models like Mixtral, Stable Diffusion XL, etc. all rely on Docker to containerize models and make them easier to serve at scale. If you go to octoai.cloud, you’ll find three complementary solutions that developers can build on to bring their GenAI-powered apps and pipelines into production. Image Generation solution exposes endpoints and APIs to perform text to image, image to image tasks built around open source foundational models such as Stable Diffusion XL or SSD. Text Generation solution exposes endpoints and APIs to perform text generation tasks built around open source foundational models, such as Mixtral/Mistral, Llama2, or CodeLlama. Compute solution lets you deploy and manage any dockerized model container on capable OctoAI cloud endpoints to power your demanding GenAI needs. 
This compute service complements the image generation and text generation solutions by offering extensive programmability and customizability for AI tasks that are not currently readily available on either the image generation or text generation solutions.

Disclaimer

OctoShop is built on the foundation of CLIP Interrogator, SDXL, and Mistral-7B and is therefore likely to carry forward the potential dangers inherent in these base models. It’s capable of generating unintended, unsuitable, offensive, and/or incorrect outputs. We therefore strongly recommend exercising caution and conducting comprehensive assessments before deploying this model into any practical applications. This GenAI model workflow doesn’t work on people as it won’t preserve their likeness; the pipeline works best on scenes, objects, or animals. Solutions are available to address this problem, such as face mapping techniques (also known as face swapping), which we can containerize with Docker and deploy on OctoAI Compute solution, but that’s something to cover in another blog post.

Conclusion

This article covered the fundamentals of building a GenAI model cocktail by relying on a combination of text generation, image generation, and compute solutions powered by the portability and scalability enabled by Docker containerization. If you’re interested in learning more about building these kinds of GenAI model cocktails, check out the OctoAI demo page or join OctoAI on Discord to see what people have been building.

Acknowledgements

The authors acknowledge Justin Gage for his thorough review, as well as Luis Vega, Sameer Farooqui, and Pedro Toruella for their contributions to the DockerCon AI/ML Workshop 2023, which inspired this article. The authors also thank Cia Bodin and her daughter Ada for the drawing used in this blog post.

Learn more

Watch the DockerCon 2023 Docker for ML, AI, and Data Science workshop. Get the latest release of Docker Desktop. Vote on what’s next!
Check out our public roadmap. Have questions? The Docker community is here to help. New to Docker? Get started. View the full article-

- multimodal

- ai apps

-

(and 3 more)

Tagged with:

-

We recently published a technical document showing how to install NVIDIA drivers on a G4DN instance on AWS, where we covered not only how to install the NVIDIA GPU drivers but also how to make sure to get CUDA working for any ML work. In this document we are going to run one of the most used generative AI models, Stable Diffusion, on Ubuntu on AWS for research and development purposes. According to AWS, “G4dn instances, powered by NVIDIA T4 GPUs, are the lowest cost GPU-based instances in the cloud for machine learning inference and small scale training. (…) optimized for applications using NVIDIA libraries such as CUDA, CuDNN, and NVENC.” G4DN instances come in different configurations:

    Instance type    vCPUs   RAM (GB)   GPUs
    g4dn.xlarge      4       16         1
    g4dn.2xlarge     8       32         1
    g4dn.4xlarge     16      64         1
    g4dn.8xlarge     32      128        1
    g4dn.12xlarge    48      192        4
    g4dn.16xlarge    64      256        1
    g4dn.metal       96      384        8

For this exercise, we will be using the g4dn.xlarge instance, since we need only 1 GPU, and with 4 vCPUs and 16GB of RAM, it will provide sufficient resources for our needs, as the GPU will handle most of the workload.

Image generation with Stable Diffusion

Stable Diffusion is a deep learning model released in 2022 that has been trained to transform text into images using latent diffusion techniques. Developed by Stability.AI, this groundbreaking technology not only provides open-source access to its trained weights but also has the ability to run on any GPU with just 4GB of RAM, making it one of the most used Generative AI models for image generation. In addition to its primary function of text-to-image generation, Stable Diffusion can also be used for tasks such as image retouching and video generation. The license for Stable Diffusion permits both commercial and non-commercial use, making it a versatile tool for various applications.

Requirements

You’ll need SSH access. If running on Ubuntu or any other Linux distribution, opening a terminal and typing ssh will get you there.
If running Windows, you will need either WSL (to run a Linux shell inside Windows) or PuTTY, an external SSH client, to connect to the machine. Make sure you have NVIDIA drivers and CUDA installed on your G4DN machine. Test with the following command:

    nvidia-smi

You should be able to see the driver and CUDA versions in the output. Let’s get started!

Step 1: Create a Python virtual environment

First, we need to download some libraries and dependencies as shown below:

    sudo apt-get install -y python3.10-venv
    sudo apt-get install ffmpeg libsm6 libxext6 -y

Now we can create the Python environment.

    python3 -m venv myvirtualenv

And finally, we need to activate it. Please note that every time we log in to the machine, we will need to reactivate it with the following line:

    source myvirtualenv/bin/activate

Step 2: Download the web GUI and get a model

To interact with the model easily, we are going to clone the Stable Diffusion WebUI from AUTOMATIC1111.

    git clone https://github.com/AUTOMATIC1111/stable-diffusion-webui.git

After cloning the repository, we can move on to the interesting part: choosing and downloading a Stable Diffusion model from the web. There are many versions and variants, which can make the journey more complicated but also more interesting as a learning experience. As you delve deeper, you will find that sometimes you need specific versions, or fine-tuned or specialized releases, for your purpose. This is where Hugging Face is great, as they host a plethora of models and checkpoint versions that you can download. Please be mindful of the license of each model you will be using. Go to Hugging Face, click on models, and start searching for “Stable Diffusion”. For this exercise, we will use version 1.5 from runwayml. Go to the “Files and versions” tab and scroll down to the actual checkpoint files. Copy the link and go back to your SSH session.
We will download the model using wget:

    cd ~/stable-diffusion-webui/models/Stable-diffusion
    wget https://huggingface.co/runwayml/stable-diffusion-v1-5/resolve/main/v1-5-pruned.safetensors

Now that the model is installed, we can run the script that will bootstrap everything and run the Web GUI.

Step 3: Run the WebUI securely and serve the model

Now that we have everything in place, we will run the WebUI and serve the model. Just as a side note, since we are not installing this on a local desktop, we cannot just open the browser and enter the URL. This URL will only respond locally because of security constraints (in other words, it is not wise to open development environments to the public). Therefore, we are going to create an SSH tunnel. Exit the SSH session. If you are running on Linux (or Linux under WSL on Windows), you can create the tunnel using SSH by running the following command:

    ssh -L 7860:localhost:7860 -i myKeyPair.pem ubuntu@<the_machine's_external_IP>

In case you are running on Windows and can’t use WSL, follow these instructions to connect via PuTTY. If everything went well, we will be able to access the WebUI URL in our local desktop browser once it is running. The entire connection will be tunneled and encrypted via SSH. In your new SSH session, enter the following commands to run the WebUI.

    cd ~/stable-diffusion-webui
    ./webui.sh

The first time will take a while as it will install PyTorch and all the required dependencies. After it finishes, it will give you the following local URL:

    http://127.0.0.1:7860

Open your local browser and go to that URL. We are ready to start playing. We tested our first prompt with all the default values, and this is what we got. Quite impressive, right? Now you are ready to start generating!
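If the browser cannot reach the WebUI, a quick check on the local desktop can show whether anything is listening on the forwarded port. The sketch below uses only the Python standard library; the function name `port_is_open` is made up for illustration, and the host and port defaults simply mirror the tunnel command above.

```python
import socket


def port_is_open(host="127.0.0.1", port=7860, timeout=2.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts, and unreachable hosts.
        return False


if __name__ == "__main__":
    if port_is_open():
        print("127.0.0.1:7860 accepts connections; the local end of the tunnel is listening.")
    else:
        print("Nothing is listening on 127.0.0.1:7860; check that the ssh -L session is still open.")
```

A successful connection only shows that the local end of the tunnel is up. It does not prove that webui.sh has finished starting on the instance, so the browser may still need a moment on the first run.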
Final thoughts I hope this guide has been helpful in deploying the Stable Diffusion model on your own instance and has also provided you with a better understanding of how these models work and what can be achieved with generative AI. It is clear that generative AI is a powerful tool for businesses today. In our next post, we will explore how to deploy and self-host a Large Language Model, another groundbreaking AI tool. Remember, if you are looking to create a production-ready solution, there are several options available to assist you. From a security perspective, Ubuntu Pro offers support for your open source supply chain, while Charmed Kubeflow provides a comprehensive stack of services for all your machine learning needs. Additionally, AWS offers Amazon Bedrock, which simplifies the complexities involved and allows you to access these services through an API. Thank you for reading and stay tuned for more exciting AI content! View the full article

-

At CES 2024, Lenovo took the wraps off its new, impressively powerful external GPU, the ThinkBook Graphics Extension. However, it comes with a fairly major caveat: it is currently only compatible with one laptop model, Lenovo’s own ThinkBook 14 i Gen 6+. Introduced at the same time, the ThinkBook 14 i Gen 6+ is a sleek, smart-looking business laptop powered by an Intel Core Ultra processor. It utilizes AI PC features to optimize user experiences across various workflows including office tools, photo, video, and audio editing, and meeting collaboration. It comes with up to 32GB dual channel LPDDR5X memory, built-in Intel Arc graphics, and a large 85Wh battery. The ThinkBook Graphics Extension, or TGX as it's known for short, is an intelligent graphics solution that connects to the ThinkBook 14 via the laptop's TGX port. This port was created by Lenovo specifically to enable a high-speed connection to a desktop GPU.

A powerful boost for AI tasks

A product of Lenovo's collaboration with NVIDIA, the TGX supports NVIDIA GeForce RTX desktop GPUs, which can greatly boost AI computing power when paired with the laptop’s Intel Core Ultra processor. Lenovo says the combined intelligence provided by itself, NVIDIA, and Intel will offer "the perfect solution for users requiring extra power for graphics-intensive or AI-based tasks." NVIDIA RTX GPUs are known for their ability to excel at parallelized work, which makes them ideal for running generative AI models, such as Stable Diffusion accelerated by NVIDIA TensorRT™. The TGX also serves as a convenient shared solution for hot desk settings in offices. Users can simply connect their ThinkBook laptop and utilize the extra power as and when required. While the launch of the ThinkBook Graphics Extension is great news, its current limitation to just one laptop model may disappoint a lot of potential users. Hopefully Lenovo will expand its compatibility to other devices in the future.
The ThinkBook 14 i Gen 6+ and ThinkBook Graphics Extension will be available in select markets from Q2 2024, with the starting bundle price expected to be $2199. View the full article

-

- lenovo

- stable diffusion

-

(and 1 more)

Tagged with:

-

Stability AI’s Stable Diffusion XL 1.0 (SDXL 1.0) foundation model is now generally available on-demand in Amazon Bedrock. SDXL 1.0 is the most advanced development in the Stable Diffusion text-to-image suite of models launched by Stability AI. The model generates images of high quality in virtually any art style and it excels at photorealism. Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models from leading AI companies, like Stability AI, along with a broad set of capabilities that provide you with the easiest way to build and scale generative AI applications with foundation models. View the full article

-

When OpenAI released ChatGPT on November 30, 2022, no one could have anticipated that the following six months would usher in a dizzying transformation for human society with the arrival of a new generation of artificial intelligence. Since the emergence of deep learning in the early 2010s, artificial intelligence has entered its third wave of development. The introduction of the Transformer architecture in 2017 propelled deep learning into the era of large models. OpenAI established the GPT family based on the Decoder part of the Transformer. ChatGPT quickly gained global popularity, astonishing people with its ability to engage in coherent and deep conversations, while also revealing capabilities such as reasoning and logical thinking that reflect intelligence.

Alongside the continuous development of AI pre-training with large models, ongoing innovation in AI-generated content (AIGC) algorithms, and the increasing mainstream adoption of multimodal AI, Generative AI technologies represented by ChatGPT have accelerated as the latest direction in AI development. This acceleration is driving the next era of significant growth and prosperity in AI, poised to have a profound impact on economic and social development. CEOs may find detailed advice for adopting Gen AI in my recently published article in Harvard Business Review, "What CEOs Need to Know About the Costs of Adopting GenAI".

Definition and Background of Generative AI Technology

Generative AI refers to the production of content through artificial intelligence technology. It involves training models to generate new content that resembles the training data. In contrast to traditional AI, which mainly focuses on recognizing and predicting patterns in existing data, Generative AI emphasizes creating new data. Its key principle lies in learning the distribution of the training data and then generating new data with similar features.
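The principle of learning a data distribution and then generating new data from it can be illustrated with a deliberately tiny sketch. It assumes the "model" is just a one-dimensional Gaussian fitted to a handful of made-up numbers; the function names and the data are hypothetical, and real generative models learn far richer distributions with neural networks.

```python
import random
import statistics

def fit_gaussian(samples):
    """'Training': estimate the mean and standard deviation of the data."""
    return statistics.fmean(samples), statistics.stdev(samples)

def generate(mean, std, n, seed=0):
    """'Generation': draw n new values from the learned distribution."""
    rng = random.Random(seed)  # fixed seed so repeated runs give the same samples
    return [rng.gauss(mean, std) for _ in range(n)]

# Hypothetical training data clustered around 5.0
training_data = [4.8, 5.1, 5.0, 4.9, 5.3, 5.2, 4.7, 5.0]

mean, std = fit_gaussian(training_data)
new_samples = generate(mean, std, n=5)

print(f"learned mean={mean:.2f}, std={std:.2f}")
print([round(x, 2) for x in new_samples])
```

The generated values are not copies of the training data, but they share its statistical features, which is the same idea that large generative models apply at a much larger scale.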
This technology finds applications in various domains such as images, text, audio, and video. Among these applications, ChatGPT stands out as a notable example. ChatGPT, a chatbot application developed by OpenAI based on the GPT-3.5 model, gained massive popularity. Within just two months of its release, it garnered over 100 million monthly active users, surpassing the growth rate of every earlier consumer internet application. Generative AI technologies, represented by large language models and image generation models, have become platform-level technologies for the new generation of artificial intelligence, contributing to a leap in value across different industries.

The explosion of Generative AI owes much to developments in three AI technology domains: generative algorithms, pre-trained models, and multimodal technologies.

Generative Algorithms: With constant innovation in generative algorithms, AI is now capable of generating various types of content, including text, code, images, speech, and more. This marks a transition from Analytical AI, which focuses on analyzing, judging, and predicting existing data patterns, to Generative AI, which deduces and creates entirely new content based on learned data.

Pre-trained Models: Pre-trained models, or large models, have significantly transformed the capabilities of Generative AI technology. Unlike in the past, when researchers had to train a separate AI model for each task, pre-trained large models generalize across tasks and have widened the industrial applications of Generative AI. These large models have strong language understanding and content generation capabilities.

Multimodal AI Technology: Multimodal technology enables Generative AI models to generate content across various modalities, such as converting text into images or videos. This enhances the versatility of Generative AI models.
Foundational technologies of Generative AI

Generative Adversarial Networks (GANs): GANs, introduced in 2014 by Ian Goodfellow and his team, are a form of generative model. They consist of two components: the Generator and the Discriminator. The Generator creates new data, while the Discriminator tries to distinguish the generated data from real data. Through iterative training, the Generator becomes adept at producing increasingly realistic data.

Variational Autoencoders (VAEs): VAEs are a probabilistic generative method. They use an Encoder and a Decoder to generate data. The Encoder maps input data to a distribution in a latent space, while the Decoder turns points sampled from this distribution into new data.

Recurrent Neural Networks (RNNs): RNNs are neural network architectures designed for sequential data processing. They possess memory capabilities to capture temporal information within sequences. In generative AI, RNNs find utility in generating sequences such as text and music.

Transformer Models: The Transformer architecture relies on a Self-Attention mechanism and has achieved significant breakthroughs in natural language processing. It is applicable to generative tasks such as text generation and machine translation.

Applications and Use Cases of Generative AI

Text Generation

Natural language generation is a key application of Generative AI, capable of producing lifelike natural language text. Generative AI can compose articles, stories, poetry, and more, offering new creative avenues for writers and content creators. Moreover, it can enhance intelligent conversation systems, elevating the interaction experience between users and AI. ChatGPT (short for Chat Generative Pre-trained Transformer) is an AI chatbot developed by OpenAI, introduced in November 2022. It employs a large-scale language model based on the GPT-3.5 architecture and has been fine-tuned using reinforcement learning from human feedback.
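Since ChatGPT builds on the Transformer, the Self-Attention mechanism mentioned under Transformer Models above can be sketched in a few lines. This is a minimal illustration under a strong simplifying assumption: queries, keys, and values are all taken to be the input vectors themselves, whereas real Transformers apply learned projection matrices and use multiple attention heads. The function names and the toy input are hypothetical.

```python
import math

def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    m = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(x):
    """Scaled dot-product self-attention with Q = K = V = x (no learned weights)."""
    d = len(x[0])
    output, weights = [], []
    for q in x:
        # Similarity of this token to every token, scaled by sqrt(d)
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in x]
        w = softmax(scores)
        weights.append(w)
        # Each output vector is a weighted average of all input vectors
        output.append([sum(wj * v[i] for wj, v in zip(w, x)) for i in range(d)])
    return output, weights

# Three toy "token" vectors of dimension 2
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
out, attn = self_attention(tokens)

for row in attn:
    print([round(w, 3) for w in row])
```

Each row of `attn` shows how strongly one token attends to every token in the sequence; the first token, for example, gives more weight to itself and the third token than to the second, because their dot products with it are larger.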
Currently, ChatGPT engages in text-based interactions and can perform various tasks, including automated text generation, question answering, and summarization.

Image Generation

Image generation stands as one of the most prevalent applications within Generative AI. Stability AI has unveiled the Stable Diffusion model, significantly reducing the technical barriers for AI-generated art through rapid open-source iteration. Consumers can subscribe to its product DreamStudio to input text prompts and generate artworks. This product has attracted over a million users across 50+ countries worldwide.

Audio-Visual Creation and Generation

Generative AI finds use in speech synthesis. For instance, generative models can create lifelike speech by learning human speech characteristics, which suits virtual assistants, voice translation, and more. Generative AI is also applicable to music generation: it can compose new music pieces based on given styles and melodies, inspiring musicians with fresh creative ideas. This technology helps musicians explore combinations of music styles and elements, and is suitable for music composition and advertising music.

Film and Gaming

Generative AI can produce virtual characters, scenes, and animations, enriching creative possibilities in film and game production. Additionally, AI can generate personalized storylines and gaming experiences based on user preferences and behaviors.

Scientific Research and Innovation

Generative AI can explore new theories and experimental methods in fields like chemistry, biology, and physics, aiding scientists in discovering new knowledge. Additionally, it can accelerate technological innovation and development in domains like drug design and materials science.
Code Generation

Having been trained on natural language and billions of lines of code, certain generative AI models are proficient in multiple programming languages, including Python, JavaScript, Go, Perl, PHP, Ruby, and more. They can generate corresponding code based on natural language instructions. GitHub Copilot, a collaboration between GitHub and OpenAI, is an AI code generation tool. It provides code suggestions based on names and the surrounding code context. It has been trained on billions of lines of code from publicly available repositories on GitHub, supporting most programming languages.

Content Understanding and Analysis

Bloomberg recently released a large language model (LLM) named BloombergGPT tailored for the financial sector. Similar to ChatGPT, it employs Transformer models and large-scale pre-training techniques for natural language processing, with 50 billion parameters. BloombergGPT’s pre-training dataset mainly comprises news and financial data from Bloomberg, forming a dataset of 363 billion tokens that supports various financial industry tasks. BloombergGPT aims to enhance users’ understanding and analysis of financial data and news. It generates finance-related natural language text based on user inputs, such as news summaries, market analyses, and investment recommendations. Its applications span financial analysis, investment consulting, asset management, and more. For instance, in asset management, it can predict future stock prices and trading volumes based on historical data and market conditions, providing investment recommendations and decision support for fund managers. In financial news, BloombergGPT automatically generates news summaries and analytical reports based on market data and events, delivering timely and accurate financial information.

AI Agents

In April 2023, an open-source project named AutoGPT was released on GitHub. As of April 16, 2023, the project had garnered over 70K stars.
AutoGPT is powered by GPT-4 and is designed to pursue user-defined goals autonomously. When presented with a task, AutoGPT analyzes the problem, proposes an execution plan, and carries it out until the user’s requirements are met. Apart from standalone AI agents, there is the possibility of a ‘Virtual AI Society’ composed of multiple AI agents. The paper “Generative Agents: Interactive Simulacra of Human Behavior” by researchers from Stanford University and Google describes a ‘virtual town’ where 25 intelligent agents coexist. Leading business consulting firms predict that by 2030, the generative AI market size will reach $110 billion.

Operations of Gen AI

Operating GenAI involves a comprehensive approach that covers the entire lifecycle of GenAI models, from development to deployment and ongoing maintenance. It includes data management, model training and optimization, model deployment and monitoring, and continuous improvement. GenAI MLOps is an essential practice for ensuring the success of GenAI projects. By adopting MLOps practices, organizations can improve the reliability, scalability, maintainability, and time-to-market of their GenAI models.

Canonical’s MLOps presents a comprehensive open-source solution, seamlessly integrating tools like Charmed Kubeflow, Charmed MLflow, and Charmed Spark. This approach frees professionals from grappling with tool compatibility issues, allowing them to concentrate on modeling. Charmed Kubeflow serves as the core of an expanding ecosystem, collaborating with other tools tailored to individual user requirements and validated across diverse platforms, including any CNCF-compliant K8s distribution and various cloud environments. Orchestrated through Juju, an open-source software operator, Charmed Kubeflow facilitates deployment, integration, and lifecycle management of applications at any scale and on any infrastructure.
Professionals can selectively deploy necessary components from the bundle, reflecting the composability of Canonical’s MLOps tooling, an essential aspect when implementing machine learning in diverse environments. For instance, while Kubeflow comprises approximately 30 components, deploying just three (Istio, Seldon, and MicroK8s) suffices when operating at the edge, because edge deployments have different requirements from large-scale ones. View the full article

-