
Ampere Computing unveiled its AmpereOne family of processors last year, boasting up to 192 single-threaded Ampere cores, the highest core count in the industry at the time. These chips, designed for cloud efficiency and performance, were Ampere's first products based on its new custom core built on internal IP, signalling a shift in the sector, according to CEO Renée James.

At the launch, James said, "Every few decades of compute there has emerged a driving application or use of performance that sets a new bar of what is required of performance. The current driving uses are AI and connected everything combined with our continued use and desire for streaming media. We cannot continue to use power as a proxy for performance in the data center. At Ampere, we design our products to maximize performance at a sustainable power, so we can continue to drive the future of the industry."

AmpereOne-3 on its way

Jeff Wittich, chief product officer at Ampere, recently spoke with The Next Platform about future generations of AmpereOne. He told the site that an updated chip, with 12 memory channels and an A2 core with improved performance, would be out later this year in keeping with the company's roadmap. This chip, which The Next Platform calls AmpereOne-2, will reportedly have a 33 percent increase in DDR5 memory controllers and up to 50 percent more memory bandwidth.

However, what's coming beyond that, at some point in 2025, sounds the most exciting. The Next Platform says the third-generation chip, which it calls AmpereOne-3, will have 256 cores and be “etched in 3 nanometer (3N to be precise) processes from TSMC”. It will use a modified A2+ core with a “two-chiplet design on the cores, with 128 cores per chiplet. It could be a four-chiplet design with 64 cores per chiplet.” The site expects AmpereOne-3 to support PCI-Express 6.0 I/O controllers and possibly a dozen DDR5 memory controllers, although this part is speculative.
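This back-of-envelope sketch makes the channel-count claim concrete by estimating peak DDR5 bandwidth per socket. It rests on three assumptions, none of which come from the report. First, each channel moves 64 data bits per transfer. Second, both chips run their memory at the same hypothetical DDR5-5200 rate. Third, the current part has eight channels, which is used only for comparison.

```python
# Peak theoretical DRAM bandwidth for a multi-channel DDR5 socket.
# Assumptions (illustrative only): each channel carries 64 data bits per
# transfer (two 32-bit subchannels, ECC bits excluded) and every channel runs
# at the same hypothetical data rate. Sustained bandwidth is always lower.

BYTES_PER_TRANSFER = 8  # 64 data bits per channel per transfer


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Return peak bandwidth in GB/s (10**9 bytes per second)."""
    return channels * mega_transfers_per_s * 1e6 * BYTES_PER_TRANSFER / 1e9


def percent_increase(old: float, new: float) -> float:
    """Relative increase from old to new, in percent."""
    return (new - old) / old * 100


if __name__ == "__main__":
    rate = 5200  # hypothetical DDR5-5200 for both parts
    current = peak_bandwidth_gbs(8, rate)    # assumed 8-channel current part
    next_gen = peak_bandwidth_gbs(12, rate)  # 12 channels, per the report
    print(f"8 channels:  {current:.1f} GB/s")
    print(f"12 channels: {next_gen:.1f} GB/s")
    print(f"increase:    {percent_increase(current, next_gen):.0f}%")
```

The formula is linear in `channels`, so at a fixed data rate any bandwidth gain comes directly from the channel ratio. Faster memory would add to it.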
“We have been moving pretty fast on the compute side,” Wittich told the site. “This design has got a lot of other cloud features in it – things around performance management to get the most out of all of those cores. In each of the chip releases, we are going to be making what would generally be considered generational changes in the CPU core. We are adding a lot in every single generation. So you are going to see more performance, a lot more efficiency, a lot more features like security enhancements, which all happen at the microarchitecture level. But we have done a lot to ensure that you get great performance consistency across all of the AmpereOnes. We are also taking a chiplet approach with this 256-core design, which is another step as well. Chiplets are a pretty big part of our overall strategy.”

AmpereOne-3 is reportedly being etched at TSMC right now, ahead of its launch next year.
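The two reported layouts for a 256-core part differ in how cores are grouped. That grouping can matter to software that pins threads or memory close to a particular die. This sketch maps a flat core ID to a (chiplet, local core) pair under each layout. The contiguous numbering scheme and the helper names are hypothetical and do not describe Ampere's actual topology.

```python
# Hypothetical mapping of a flat core ID onto chiplets for the two layouts
# The Next Platform floated for a 256-core AmpereOne-3. Contiguous block
# numbering is an illustrative assumption, not Ampere's published scheme.

TOTAL_CORES = 256


def cores_per_chiplet(num_chiplets: int) -> int:
    """Return cores per chiplet, requiring an even split."""
    if num_chiplets <= 0 or TOTAL_CORES % num_chiplets != 0:
        raise ValueError("cores must divide evenly across chiplets")
    return TOTAL_CORES // num_chiplets


def locate_core(core_id: int, num_chiplets: int) -> tuple[int, int]:
    """Map a global core ID to (chiplet index, core index within chiplet)."""
    if not 0 <= core_id < TOTAL_CORES:
        raise ValueError("core_id out of range")
    return divmod(core_id, cores_per_chiplet(num_chiplets))


if __name__ == "__main__":
    for chiplets in (2, 4):
        print(f"{chiplets} chiplets: {cores_per_chiplet(chiplets)} cores each; "
              f"core 200 -> {locate_core(200, chiplets)}")
```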


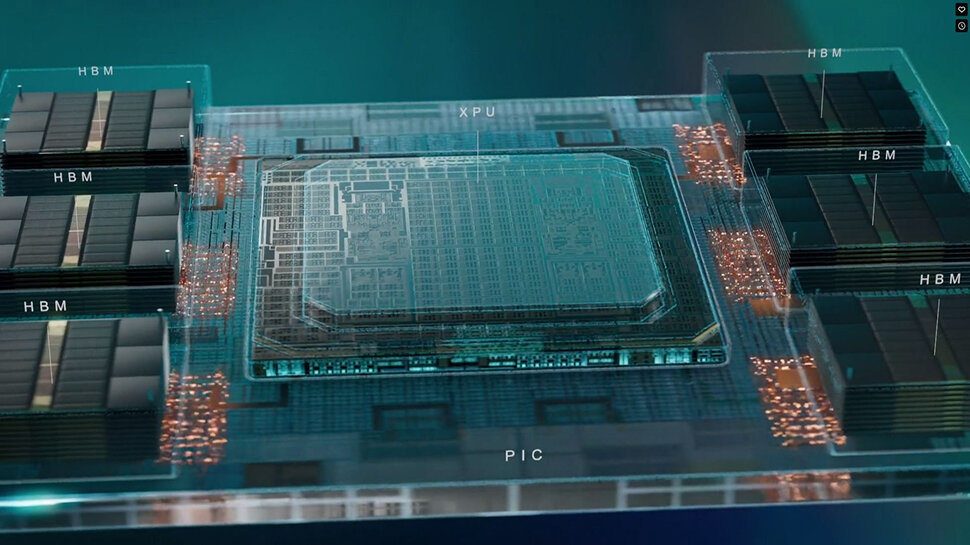

There's no shortage of startups pushing technology that could one day prove pivotal in AI computing and memory infrastructure. Celestial AI, which recently secured $175 million in Series C funding, is looking to commercialize its Photonic Fabric technology, which aims to redefine optical interconnects. Celestial AI's foundational technology is designed to disaggregate AI compute from memory to offer a “transformative leap in AI system performance that is ten years more advanced than existing technologies.”

Lower energy overhead and latency

The company has reportedly been in talks with several hyperscale customers and a major processor manufacturer about integrating its technology. Though specific details remain under wraps, that manufacturer is quite likely to be AMD, since AMD Ventures is one of Celestial AI's backers.

As reported by The Next Platform, the core of Celestial AI's strategy lies in its chiplets, interposers, and optical interconnect technology. By combining DDR5 and HBM memory, the company aims to significantly reduce power consumption while maintaining high performance. The chiplets can be used for additional memory capacity or as interconnects between chips, offering speeds comparable to NVLink or Infinity Fabric.

“The surge in demand for our Photonic Fabric is the product of having the right technology, the right team and the right customer engagement model,” said Dave Lazovsky, co-founder and CEO of Celestial AI. “We are experiencing broad customer adoption resulting from our full-stack technology offerings, providing electrical-optical-electrical links that deliver data at the bandwidth, latency, bit error rate (BER) and power required, compatible with the logical protocols of our customer's AI accelerators and GPUs. Deep strategic collaborations with hyperscale data center customers focused on optimizing system-level Accelerated Computing architectures are a prerequisite for these solutions. We're excited to be working with the giants of our industry to propel commercialization of the Photonic Fabric.”

Celestial AI faces challenges in timing and competition from other startups in the silicon photonics space. Even so, the potential impact of its technology on the AI processing landscape makes it a promising contender. As the industry moves towards co-packaged optics and silicon photonic interposers, Celestial AI's Photonic Fabric could play a key role in shaping the future of AI computing.
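Lazovsky's link requirements (bandwidth, BER and power) are related by two simple formulas:

- Link power equals bandwidth multiplied by energy per bit.
- The expected number of raw bit errors equals the number of bits transferred multiplied by the BER.

The `Link` class is a hypothetical illustration with placeholder numbers, not a model of the Photonic Fabric.

```python
from dataclasses import dataclass


@dataclass
class Link:
    """Hypothetical point-to-point link described by three parameters."""
    bandwidth_gbps: float     # payload bandwidth, gigabits per second
    energy_pj_per_bit: float  # energy per transferred bit, picojoules
    ber: float                # bit error rate: expected errors per bit

    def power_watts(self) -> float:
        # Power = (bits per second) x (joules per bit)
        return self.bandwidth_gbps * 1e9 * self.energy_pj_per_bit * 1e-12

    def expected_bit_errors(self, seconds: float) -> float:
        # Expected raw errors over the interval, before any error correction
        return self.bandwidth_gbps * 1e9 * seconds * self.ber


if __name__ == "__main__":
    # Placeholder figures chosen only to show the scaling, not vendor data
    baseline = Link(bandwidth_gbps=1000, energy_pj_per_bit=5.0, ber=1e-12)
    leaner = Link(bandwidth_gbps=1000, energy_pj_per_bit=2.5, ber=1e-12)
    for name, link in (("baseline", baseline), ("leaner", leaner)):
        print(f"{name}: {link.power_watts():.2f} W, "
              f"{link.expected_bit_errors(3600):.0f} expected raw errors per hour")
```

At a fixed bandwidth, halving the energy per bit halves link power. BER, in contrast, determines how much error correction the link needs, independent of power.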


Micron has showcased its colossal 256GB DDR5-8800 MCRDIMM memory modules at the recent Nvidia GTC 2024 conference. The high-capacity, double-height, 20-watt modules are tailored for next-generation AI servers, such as those based on Intel's Xeon Scalable 'Granite Rapids' processors, which require substantial memory for training. Tom's Hardware saw and photographed the module first-hand. It says the company displayed a 'Tall' version of the module at GTC but also intends to offer Standard-height MCRDIMMs suitable for 1U servers.

Multiplexer Combined Ranks DIMMs

Both versions of the 256GB MCRDIMM are built from monolithic 32Gb DDR5 ICs. The Tall module houses 80 DRAM chips across both sides. The Standard module uses 2Hi stacked packages and will run slightly hotter as a result.

MCRDIMMs, or Multiplexer Combined Ranks DIMMs, are dual-rank memory modules that use a specialized buffer to let both ranks operate concurrently. As Tom's Hardware explains, “The buffer allows the two physical ranks to act as if they were two separate modules working in parallel, thereby doubling performance by enabling the simultaneous retrieval of 128 bytes of data from both ranks per clock, effectively doubling the performance of a single module. Meanwhile, the buffer works with its host memory controller using the DDR5 protocol, albeit at speeds beyond those specified by the standard, at 8800 MT/s in this case.”

Customers keen to get their hands on the new memory modules won't have long to wait. In prepared remarks for the company's earnings call last week, Sanjay Mehrotra, chief executive of Micron, said, “We [have] started sampling our 256GB MCRDIMM module, which further enhances performance and increases DRAM content per server.” Micron hasn't announced pricing yet, but the cost per module is likely to exceed $10,000.
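The module figures can be sanity-checked with a little arithmetic. This sketch makes three assumptions:

- The 80 dies are the module's total.
- Each die holds 32Gb (4GB).
- Bandwidth counts only the 64 data bits per transfer.

Under those assumptions, 64 dies hold the 256GB of data and the remaining 16 provide ECC. That is a 25 percent overhead, which lines up with the 8 ECC bits DDR5 RDIMMs typically add to each 32-bit subchannel. The per-rank rate is a conceptual model of how multiplexing two ranks lets each one run at half the host-side speed. The function names are illustrative.

```python
# Back-of-envelope arithmetic for a 256GB DDR5-8800 MCRDIMM.
# Assumptions (not Micron specifications): 80 DRAM dies in total across both
# sides, 32Gb (4GB) per die, and a 64-bit data path per module with ECC bits
# left out of the bandwidth figure.

GBIT_PER_DIE = 32
DIES_TOTAL = 80
CAPACITY_GB = 256


def die_budget(capacity_gb: int, dies_total: int, gbit_per_die: int) -> tuple[int, int]:
    """Split the module's dies into (data dies, ECC dies)."""
    data_dies = capacity_gb * 8 // gbit_per_die  # 8 gigabits per gigabyte
    return data_dies, dies_total - data_dies


def module_peak_bandwidth_gbs(mt_per_s: int, data_bytes_per_transfer: int = 8) -> float:
    """Peak data bandwidth of one module in GB/s."""
    return mt_per_s * 1e6 * data_bytes_per_transfer / 1e9


def per_rank_rate(host_mt_per_s: int, ranks_multiplexed: int = 2) -> float:
    """Rate each rank must sustain when the buffer interleaves the ranks
    onto one host-side stream (a conceptual model of multiplexed ranks)."""
    return host_mt_per_s / ranks_multiplexed


if __name__ == "__main__":
    data, ecc = die_budget(CAPACITY_GB, DIES_TOTAL, GBIT_PER_DIE)
    print(f"data dies: {data}, ECC dies: {ecc}, ECC overhead: {ecc / data:.0%}")
    print(f"peak bandwidth at 8800 MT/s: {module_peak_bandwidth_gbs(8800):.1f} GB/s")
    print(f"per-rank rate: {per_rank_rate(8800):.0f} MT/s")
```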
