Showing results for tags 'startups'.

-

The 2024 Snowflake Startup Challenge began with over 900 applications from startups Powered by Snowflake in more than 100 countries. Our judges narrowed that long list of contenders down to 10, and after much deliberation, they’ve now pared it down to the final three. We are pleased to announce that BigGeo, Scientific Financial Systems and SignalFlare.ai by Extropy360 will advance to the Snowflake Startup Challenge finale and compete for the opportunity to receive a share of up to $1 million in investments from Snowflake Ventures, plus exclusive mentorship and visibility opportunities from NYSE. Many thanks to the other semifinalists for their dedication and the effort they put into their presentations during the previous round of competition. Let’s get to know the 2024 finalists. BigGeo Crunching vast amounts of geospatial data is an intimidating, resource-consuming task. But the potential rewards are so rich — whether it is mapping the spread of diseases; determining optimum places for new housing developments; creating more efficient travel and shipping routes; and yes, providing more accurate and timely weather and traffic reports. BigGeo is looking to remove the intimidation factor and give companies immediate, interactive geospatial insights. “With our technology, clients can execute fast and effective geospatial queries, integrate seamlessly with Snowpark Container Services and significantly improve data visualization,” says Brent Lane, Co-Founder and CEO of BigGeo. “This makes geospatial insights more accessible and actionable than ever before, empowering organizations to make informed decisions quickly.” BigGeo’s mission is to convert the theoretical advancements uncovered during the founders’ 15 years of research into practical, market-ready solutions. 
Its Volumetric and Surface-Level Discrete Global Grid System (DGGS), which manages surface-level, subsurface and aerial data, supports the integration of diverse data forms, including 2D and 3D data, and facilitates dynamic interactions with spatial data. The containerized deployment within Snowflake Native Apps allows interoperability across various data sets and enables secure, governed geospatial AI. The ability to handle large volumes of real-time geospatial data and meet customers’ complex analysis demands is a particular point of pride for the BigGeo team. One of their customers, a major data supplier, used the solution to stream near real-time visualizations of a massive 150 million polygon data set at sub-second speeds, surpassing the capabilities of competing solutions. By directly connecting the visualization layer to the data supplier’s data warehouse, BigGeo enabled informed decision-making directly through the map. “This accolade has definitely energized the entire BigGeo team to continue developing solutions that address real-world challenges, drive significant industry change, and build environmentally conscious solutions that align with our vision for a greener future,” says Brett Jones, Co-Founder and President of BigGeo. The team is excited to present at the Startup Challenge finale. Not only is it an opportunity to expand BigGeo’s network and sharpen its competitive edge, but the team hopes to gain valuable insights from top-tier leaders. “We are very excited to meet Lynn Martin, President of NYSE Group. She has a profound understanding of technology’s crucial role in data integration, transformation and management — areas central to our work,” says Lane. “Her passion for AI and its role in enhancing data services also aligns with what we do at BigGeo.” Scientific Financial Systems Beating the market is the driving force for investment management firms. 
In today’s markets, that often means making quick calculations over vast volumes of data to locate those scarce alpha opportunities. This is a difficult and time-consuming task, one that spurred Scientific Financial Systems (SFS) to develop a new solution: Quotient. Quotient enables financial institutions to rapidly analyze large amounts of data and provide relevant recommendations quickly. Running natively on Snowflake, Quotient uses a novel semantic layer that integrates Python and SQL technologies. For SFS, Snowflake was in the right place at the right time: Quotient embodies the concept of “localized compute” and was an ideal candidate for the Snowflake Native App model, which helps SFS address scalability. “We are thrilled to share our story about building our applications on top of Snowflake and leveraging the power that Snowflake offers in security, performance and scalability,” says Anne Millington, Co-Founder and CEO. “It is very rewarding to be recognized for the innovations we offer.” The structure and power of Quotient give investment managers the tools they need to find increased alpha outperformance. For small investment managers, Quotient data science and ML techniques provide an immediate and incredibly robust quant bench. For large investment firms, SFS provides a framework to streamline and improve data analytics so their teams can spend more time on research. StarMine’s quantitative analytics research team has seen the benefits for itself. The team focuses on developing financial models based on the evaluation of factors that may impact equity performance. The Snowflake Data Cloud provides an ideal environment to evaluate factors by running them against vast amounts of historical data. With the colocation of Quotient compute and StarMine’s data, research that previously took two to three weeks can be completed in one to two days. 
Plus, StarMine was able to run the factor based on a broad global universe without restriction and see the results before making further customizations to drill into specific equity criteria. With full transparency into the Quotient engine’s calculations, StarMine has confidence in the results. As for the SFS leadership team, they are honing their presentation for the Snowflake Startup Challenge finale and looking forward to making their pitch. Given their focus on investment firms, winning mentorship from NYSE companies would be “a tremendous honor,” says Millington. “Gaining insight into the needs and perspectives of these NYSE-listed companies would offer great value to the SFS team on multiple levels, from product roadmap considerations, technology implications for AI and NLP, operational implications and more. The expertise and experience of learning from real-world examples at the highest echelon of success would be invaluable,” she explains. SignalFlare.ai by Extropy360 “We are beyond excited, and frankly a bit shocked” to be a Snowflake Startup Challenge finalist, says Michael Lukianoff, Founder and CEO of SignalFlare.ai. “The team and I come from years of experience in brick-and-mortar restaurant tech and data — which has never been held in the same regard as e-commerce or social media tech. We feel like this honor is not just a recognition of SignalFlare.ai, but of the industry we represent, where the opportunities are boundless.” Those opportunities are the reason why SignalFlare.ai’s founders created a decision intelligence platform for chain restaurants. Devastated by the impact of COVID-19, the restaurant industry needed to reinvent how it analyzed demand and use data to make better decisions in high-impact areas, like pricing, promotion, menus and new market opportunities. 
SignalFlare.ai built new methods, tapped into new data and created a different tech stack, developing a solution, with Snowflake at its core, that incorporates geospatial data for targeting, along with ML models for pricing optimization and risk simulation. Snowflake’s architecture allows visibility into data transformation strategies and performant cross-customer analytics. The team implements Dynamic Tables to ensure the timeliness of changing source data and filters results specific to target analytics. Streamlit apps assist in monitoring incoming data quality and Snowpark integrating ML models for training and returning inferences to Snowflake for downstream analytics. Authentic Restaurant Brands, a restaurant acquisition fund that is part of SignalFlare’s “innovation circle” of customers — essentially a test group and sounding board for new ideas and products — has become an avid user of SignalFlare. The company started by validating the SignalFlare solution and pricing method on one brand; after seeing the benefits, it added two more and recently added a fourth after an acquisition. “I have worked with many pricing vendors in my career. SignalFlare’s approach is the most thorough and cost-effective I have encountered,” says Jorge Zaidan, Chief Strategy Officer of Authentic Restaurant Brands. Looking ahead to the finale, the SignalFlare team is eager to present and to meet Benoit Dageville, Co-Founder and President of Product at Snowflake, who is part of the Startup Challenge judging panel. “The vision that Benoit brought to life made our vision possible,” says Lukianoff, noting that Snowflake was “life-altering” for people like himself, who are obsessed with data accuracy and usability. “The features being released are constantly making our job easier and more efficient, and creating more opportunities. 
That is a different experience from any technology partner I’ve experienced.”

Next up: Startup Challenge Finale in San Francisco

Want to see which of these three startups will take the top prize? Register for Dev Day now to see the live finale and experience all of the developer-centric demos and sessions, discussions, expert Q&As and hands-on labs designed to set you up for AI/ML and app dev success. It’s also never too soon to start thinking about the next Snowflake Startup Challenge: Complete this form to get notified when the 2025 contest opens later this year.

The post Meet the 2024 Snowflake Startup Challenge Finalists appeared first on Snowflake.

-

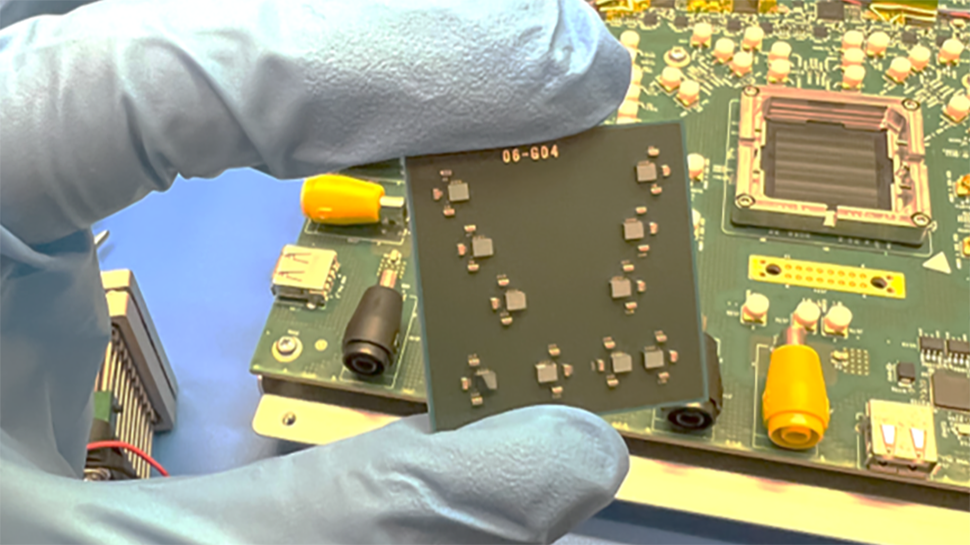

Many system designers are exploring chiplet-based SiPs that move beyond the limitations and costs of huge single-die implementations, but which depend heavily on silicon interposers as substrates for mounting and interconnecting the dies. Silicon interposers deliver a higher data rate than organic substrates, but are not without drawbacks. They are costly, proprietary and limit the number of chiplets that can be placed on one substrate due to size restrictions, while increasing TCO. Many CPUs are multi-die assemblies which make use of organic substrates (implemented using chiplet interconnect standards like UCIe and the Open Compute Project’s Bunch of Wires (BoW)), but they can’t compete performance-wise with silicon interposers. Build a better ‘Blackwell’ Now, Eliyan has come up with what it believes is a viable solution that offers the performance advantages of silicon interposers but without their limitations. The solution to this dilemma, the firm says, is to look at the dies themselves - "at the tiny electronic circuits that drive the interconnect lines”. Most of today’s chiplet interconnect standards use a physical-layer (PHY) IP block, but Eliyan’s NuLink PHY reportedly reaches the same maximum performance levels on organic substrates that alternative PHYs can only achieve on silicon interposers. The company says NuLink PHY, “enables systems with higher performance (more memory) and lower TCO (no interposer),” with results that deliver up to 4x the bandwidth, 4x the power efficiency, up to 4x the SiP size and up to 10x the AI performance. 
Eliyan’s Co-founder and chief executive officer Ramin Farjadrad recently talked to The Next Platform about how NuLink PHY can be used to “build better, cheaper, and more powerful compute engines than can be done with current packaging techniques based on silicon interposers.” The article, titled “How To Build A Better ‘Blackwell’ GPU Than Nvidia Did”, explains how its technology could be used to significantly improve on Nvidia’s superchip design. It’s a fascinating read, and hints at what could be possible in the future. As The Next Platform’s Timothy Prickett Morgan sums up, “Any memory, any co-packaged optics, any PCI-Express or other controller, can be linked using NuLink to any XPU. At this point, the socket really has become the motherboard.” There’s certainly a lot of interest in Eliyan’s NuLink PHY. The company recently closed a $60 Million Series B funding round, co-led by Samsung Catalyst Fund and Tiger Global Management. “This investment reflects the confidence in our approach to integrating multi-chip architectures that address the critical challenges of high costs, low yield, power consumption, manufacturing complexity, and size limitations,” Farjadrad said, following the funding announcement.

-

In 2020, Snowflake announced a new global competition to recognize the work of early-stage startups building their apps — and their businesses — on Snowflake, offering up to $250,000 in investment as the top prize. Four years later, the Snowflake Startup Challenge has grown into a premiere showcase for emerging startups, garnering interest from companies in over 100 countries and offering a prize package featuring a portion of up to $1 million in potential investment opportunities and exclusive mentorship and marketing opportunities from NYSE. This year’s entries presented an impressively diverse set of use cases. The list of Top 10 semi-finalists is a perfect example: we have use cases for cybersecurity, gen AI, food safety, restaurant chain pricing, quantitative trading analytics, geospatial data, sales pipeline measurement, marketing tech and healthcare. Just as varied was the list of Snowflake tech that early-stage startups are using to drive their innovative entries. Snowflake Native Apps (generally available on AWS and Azure, private preview on GCP) and Snowpark Container Services (currently in public preview) were exceptionally popular, which speaks to their flexibility, ease of use and business value. In fact, 8 of the 10 startups in our semi-finalist list plan to use one or both of these technologies in their offerings. We saw a lot of interesting AI/ML integrations and capabilities plus the use of Dynamic Tables (currently in public preview), UDFs and stored procedures, Streamlit, and Streamlit in Snowflake. Many entries also used Snowpark, taking advantage of the ability to work in the code they prefer to develop data pipelines, ML models and apps, then execute in Snowflake. Our sincere thanks go out to everyone who participated in this year’s competition. We recognize the amount of work involved in your entries, and we appreciate every submission. Let’s meet the 10 companies competing for the 2024 Snowflake Startup Challenge crown! 
BigGeo

BigGeo accelerates geospatial data processing by optimizing performance and eliminating challenges typically associated with big data. Built atop BigGeo’s proprietary Volumetric and Surface-Level Discrete Global Grid System (DGGS), which manages surface-level, subsurface and aerial data, BigGeo Search allows you to perform geospatial queries against large geospatial data sets at high speeds. Capable of a headless deployment into Snowpark Container Services, BigGeo can be used to speed up queries of data stored in Snowflake, gather those insights into a dashboard, visualize them on a map, and more.

Implentio

Implentio is a centralized tool that helps ecommerce ops and finance teams efficiently and cost-effectively manage fulfillment and logistics spending. The solution ingests, transforms and centralizes large volumes of operations data from disparate systems and applies AI and ML to deliver advanced optimizations, insights and analyses that help teams improve invoice reconciliation and catch 3PL/freight billing errors.

Innova-Q

Focusing on food safety and quality, Innova-Q’s Quality Performance Forecast Application delivers near real-time insights into product and manufacturing process performance so companies can assess and address product risks before they affect public safety, operational effectiveness or direct costs. The Innova-Q dashboard provides access to product safety and quality performance data, historical risk data, and analysis results for proactive risk management.

Leap Metrics

Leap Metrics is a SaaS company that seeks to improve health outcomes for populations with chronic conditions while reducing the cost of care. Their analytics-first approach to healthcare leverages AI-powered insights and workflows through natively integrated data management, analytics and care management solutions. Leap Metrics’ Sevida platform unifies actionable analytics and AI with intelligent workflows tailored for care teams for an intuitive experience.

Quilr

Quilr’s adaptive protection platform uses AI and the principle of human-centric security to reduce incidents caused by human errors, unintentional insiders and social engineering. It provides proactive assistance to employees before they perform an insecure action, without disrupting business workflow. Quilr also gives organizations visibility into their Human Risk Posture to better understand what risky behaviors their users are performing, and where they have process or control gaps that could result in breaches.

Scientific Financial Systems

Beating the market is the driving force for investment management firms, but beating the market is not easy. SFS’s Quotient provides a unique set of analytics tools based on data science and ML best practices that rapidly analyzes large amounts of data and enables accurate data calculations at scale, with full transparency into calculation details. Quotient automates data management, time-series operations and production so investment firms can focus on idea generation and building proprietary alpha models to identify market insights and investment opportunities.

SignalFlare.ai by Extropy360

Pricing and analytics for chain restaurants is the primary focus of SignalFlare.ai, a decision intelligence solution that combines ML models for price optimization and risk simulation with geospatial expertise. Restaurants can use SignalFlare to refine and analyze customer and location data so they can better capture price opportunities and drive customer visits.

Stellar

Stellar is designed to make generative AI easy for Snowflake customers. It deploys gen AI components as containers on Snowpark Container Services, close to the customer’s data. Stellar Launchpad gives customers a conversational way to analyze and synthesize structured and unstructured data to power AI initiatives, making it possible to deploy multiple gen AI apps and virtual assistants to meet the demand for AI-driven business outcomes.

Titan Systems

Titan helps enterprises to manage, monitor and scale secure access to data in Snowflake with an infrastructure-as-code approach. Titan Core analyzes each change to your Snowflake account and evaluates it against a set of security policies, then rejects changes that are out of compliance to help catch data leaks before they happen.

Vector

Vector is a relationship intelligence platform that alerts sellers when they can break through the noise by detecting existing relationships between target accounts and happy customers, execs and investors. Vector can infer who knows whom and their connections by analyzing terabytes of contact, business, experience and IP data to determine digital fingerprints, attributes and shared experiences.

What’s next: Preparing the perfect pitch

In Round 2, each of these semi-finalists will create an investor pitch video, and their leadership team will be interviewed by the judges to discuss the company’s entry, the product and business strategy, and what the company would do with an investment should it win the 2024 Snowflake Startup Challenge. Based on this information, the judges will select three finalists, to be announced in May. Those three companies will present to our esteemed judging panel (Benoit Dageville, Snowflake Co-Founder and President of Product; Denise Persson, Snowflake CMO; Lynn Martin, NYSE Group President; and Brad Gerstner, Altimeter Founder and CEO) during the Startup Challenge Finale at Dev Day in San Francisco on June 6. The judges will ask questions and deliberate live before naming the 2024 Grand Prize winner. Register for Dev Day now to see the live finale and experience all of the developer-centric demos and sessions, discussions, expert Q&As and hands-on labs designed to set you up for AI/ML and app dev success. Congratulations to all of the semi-finalists, and best of luck in the next round!
The post Snowflake Startup Challenge 2024: Announcing the 10 Semi-Finalists appeared first on Snowflake.

-

According to Israeli startup NeuReality, many AI possibilities aren't fully realized due to the cost and complexity of building and scaling AI systems. Current solutions are not optimized for inference and rely on general-purpose CPUs, which were not designed for AI. Moreover, CPU-centric architectures necessitate multiple hardware components, resulting in underutilized Deep Learning Accelerators (DLAs) due to CPU bottlenecks. NeuReality's answer to this problem is the NR1AI Inference Solution, a combination of purpose-built software and a unique network addressable inference server-on-a-chip. NeuReality says this will deliver improved performance and scalability at a lower cost alongside reduced power consumption. An express lane for large AI pipelines “Our disruptive AI Inference technology is unbound by conventional CPUs, GPUs, and NICs," said NeuReality’s CEO Moshe Tanach. "We didn’t try to just improve an already flawed system. Instead, we unpacked and redefined the ideal AI Inference system from top to bottom and end to end, to deliver breakthrough performance, cost savings, and energy efficiency." The key to NeuReality's solution is a Network Addressable Processing Unit (NAPU), a new architecture design that leverages the power of DLAs. The NeuReality NR1, a network addressable inference Server-on-a-Chip, has an embedded Neural Network Engine and a NAPU. This new architecture enables inference through hardware with AI-over-Fabric, an AI hypervisor, and AI-pipeline offload. The company has two products that utilize its Server-on-a-Chip: the NR1-M AI Inference Module and the NR1-S AI Inference Appliance. The former is a Full-Height, Double-wide PCIe card that contains one NR1 NAPU system-on-a-chip and a network-addressable Inference Server that can connect to an external DLA. The latter is an AI-centric inference server containing NR1-M modules with the NR1 NAPU. 
NeuReality claims the server “lowers cost and power performance by up to 50X but doesn’t require IT to implement for end users.” “Investing in more and more DLAs, GPUs, LPUs, TPUs… won’t address your core issue of system inefficiency,” said Tanach. “It's akin to installing a faster engine in your car to navigate through traffic congestion and dead ends - it simply won't get you to your destination any faster. NeuReality, on the other hand, provides an express lane for large AI pipelines, seamlessly routing tasks to purpose-built AI devices and swiftly delivering responses to your customers, while conserving both resources and capital.” NeuReality recently secured $20 million in funding from the European Innovation Council (EIC) Fund, Varana Capital, Cleveland Avenue, XT Hi-Tech and OurCrowd.

-

MemVerge, a provider of software designed to accelerate and optimize data-intensive applications, has partnered with Micron to boost the performance of LLMs using Compute Express Link (CXL) technology. The company's Memory Machine software uses CXL to reduce idle time in GPUs caused by memory loading. The technology was demonstrated at Micron’s booth at Nvidia GTC 2024 and Charles Fan, CEO and Co-founder of MemVerge said, “Scaling LLM performance cost-effectively means keeping the GPUs fed with data. Our demo at GTC demonstrates that pools of tiered memory not only drive performance higher but also maximize the utilization of precious GPU resources.” Impressive results The demo utilized a high-throughput FlexGen generation engine and an OPT-66B large language model. This was performed on a Supermicro Petascale Server, equipped with an AMD Genoa CPU, Nvidia A10 GPU, Micron DDR5-4800 DIMMs, CZ120 CXL memory modules, and MemVerge Memory Machine X intelligent tiering software. The demo contrasted the performance of a job running on an A10 GPU with 24GB of GDDR6 memory, and data fed from 8x 32GB Micron DRAM, against the same job running on the Supermicro server fitted with Micron CZ120 CXL 24GB memory expander and the MemVerge software. The FlexGen benchmark, using tiered memory, completed tasks in under half the time of traditional NVMe storage methods. Additionally, GPU utilization jumped from 51.8% to 91.8%, reportedly as a result of MemVerge Memory Machine X software's transparent data tiering across GPU, CPU, and CXL memory. Raj Narasimhan, senior vice president and general manager of Micron’s Compute and Networking Business Unit, said “Through our collaboration with MemVerge, Micron is able to demonstrate the substantial benefits of CXL memory modules to improve effective GPU throughput for AI applications resulting in faster time to insights for customers. 
Micron’s innovations across the memory portfolio provide compute with the necessary memory capacity and bandwidth to scale AI use cases from cloud to the edge.” However, experts remain skeptical about the claims. Blocks and Files pointed out that the Nvidia A10 GPU uses GDDR6 memory, which is not HBM. A MemVerge spokesperson responded to this point, and others that the site raised, stating, “Our solution does have the same effect on the other GPUs with HBM. Between Flexgen’s memory offloading capabilities and Memory Machine X’s memory tiering capabilities, the solution is managing the entire memory hierarchy that includes GPU, CPU and CXL memory modules.”
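The GPU utilization figures reported for the demo can be read two ways: as a percentage-point gain or as a relative improvement. The short Python sketch below only restates the article's own numbers (51.8% and 91.8%); the function name is illustrative and no new measurement is implied.

```python
def utilization_gain(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (percentage-point gain, relative improvement factor)."""
    if before_pct <= 0:
        raise ValueError("before_pct must be positive")
    points = after_pct - before_pct   # absolute change in percentage points
    factor = after_pct / before_pct   # how many times the baseline utilization
    return points, factor


points, factor = utilization_gain(51.8, 91.8)
print(f"GPU utilization rose by {points:.1f} points, about {factor:.2f}x the baseline")
```

With the reported figures this works out to a 40-point gain, or roughly 1.77 times the baseline utilization.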

-

In today's fast-paced business environment, startups need to leverage the power of the cloud to achieve scale, performance, and consistency for their apps. Google Cloud provides three popular cloud databases that offer reliable PostgreSQL support: Spanner, AlloyDB and Cloud SQL. In this article, we will explore the features and benefits of these databases, focusing on AlloyDB and Spanner and how startups can use them, together or separately, to simplify infrastructure, reduce operational costs, and maximize performance.

Spanner: a scalable and globally distributed database

Spanner is a fully managed database for both relational and non-relational workloads that is designed to scale horizontally across multiple regions and continents. Combining strong consistency, high availability, and low latency, it stands as the ideal solution for mission-critical applications demanding high throughput and rapid response times. Spanner provides a PostgreSQL interface, ensuring your schemas and queries are portable to other environments within or outside of Google Cloud. This also allows developers to leverage many of the tools and techniques they already know, flattening the learning curve when transitioning to Spanner. One of the key features of Spanner is its ability to replicate data across multiple regions while maintaining strong ACID (atomicity, consistency, isolation, durability) transactions and a familiar SQL interface. On top of that, Spanner offers schema changes without downtime, fully automatic data replication, and data redundancy. As a result, developers can build applications that operate seamlessly across multiple regions without worrying about data consistency issues, regional failures, or planned maintenance.

When is Spanner the right fit?
Spanner also offers automatic horizontal scaling, from an inexpensive slice of one compute node to thousands of nodes (see the graph below), making it easy for a startup to increase or decrease its query and data capacity based on workload demands. Spanner allows you to resize elastically without downtime or other disruption, so you can better align your usage with the workload. As a result, startups save money by paying only for the resources needed. By contrast, with legacy scale-up databases, changing capacity typically involves 1) standing up new infrastructure, 2) migrating the schema and all of the data, and 3) a big-bang cutover in coordination with downstream applications. Spanner allows you to adjust capacity (read and write) on the fly with no downtime. A built-in managed autoscaler adjusts the capacity for you based on signals, such as CPU usage. Spanner scales linearly from tiny workloads (100 processing units, the equivalent of 0.1 node, and 400GB of data) to thousands of nodes, handling PB of data and millions of queries per second. Recent improvements have raised the storage capacity to 10TB per node and increased the throughput by 50%. For example, Niantic runs 5,000 node instances handling the traffic for Pokémon GO. This elasticity saves you money, reduces risks, and provides scale insurance. Even if you aren’t there today, rest assured you can grow to Niantic or Gmail-sized workloads without disruptive re-architecture with Spanner.

Start small and scale with Spanner

AlloyDB: A cloud-native and managed PostgreSQL database

Google Cloud AlloyDB for PostgreSQL is a fully managed, PostgreSQL-compatible database service that's designed for your most demanding workloads, including 1) transactional, 2) analytical, and 3) hybrid transactional and analytical processing (HTAP).
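The Spanner sizing figures quoted earlier in this section can be made concrete with a little arithmetic. The Python sketch below assumes 1,000 processing units per node (implied by the article's "100 processing units, the equivalent of 0.1 node") and, purely for illustration, that capacity is provisioned in steps of 100 processing units. The function names are hypothetical; this is not a Spanner API, and the per-node storage limit is passed in by the caller.

```python
PU_PER_NODE = 1000  # implied by the article: 100 processing units = 0.1 node
PU_STEP = 100       # assumed provisioning granularity, for illustration only


def nodes(processing_units: int) -> float:
    """Convert processing units to the equivalent number of nodes."""
    return processing_units / PU_PER_NODE


def min_processing_units(data_gb: int, gb_per_node: int) -> int:
    """Smallest capacity (a multiple of PU_STEP) whose storage limit covers data_gb."""
    if data_gb < 0 or gb_per_node <= 0:
        raise ValueError("data_gb must be >= 0 and gb_per_node must be > 0")
    # Integer ceiling division avoids floating-point rounding surprises.
    exact_pu = -(-data_gb * PU_PER_NODE // gb_per_node)
    steps = max(1, -(-exact_pu // PU_STEP))
    return steps * PU_STEP


# 400GB at 0.1 node implies about 4TB (4,000GB) per node before the increase.
print(min_processing_units(400, 4_000))      # 100
# 25TB of data at the newer 10TB-per-node limit.
print(min_processing_units(25_000, 10_000))  # 2500
print(nodes(2500))                           # 2.5
```

Storage is only one input to sizing; query throughput and CPU usage, which the managed autoscaler reacts to, are not modeled here.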
In Google’s performance tests, AlloyDB delivers up to 100X faster analytical queries than standard PostgreSQL and is more than 2X faster than Amazon’s comparable PostgreSQL-compatible service for transactional workloads. AlloyDB also offers a number of features designed to simplify application development. For example, it supports standard PostgreSQL syntax and extensions, making it easy to write queries and manipulate data. Another important consideration: AlloyDB may be the better choice if you’re planning to build GenAI apps, thanks to AlloyDB AI, a built-in set of capabilities for working with vectors, models, and data. AlloyDB accelerates analytical queries with its columnar engine, which stores frequently queried data in an in-memory, columnar format and can significantly improve the performance of these queries. Intelligent, workload-aware dynamic data organization leverages both row-based and column-based formats. Multiple layers of cache ensure excellent price-performance.

Choosing the right database for your startup

When it comes to choosing the right database for your startup, there are several factors to consider. First and foremost, you need to consider your application's requirements in terms of performance, availability, global consistency, and scalability. Are you building a consumer app for millions of concurrent users? Maybe a corporate app that will be used for real-time analytics? Each database has its own strengths.
| Feature | AlloyDB | Spanner |
| --- | --- | --- |
| Type | Cloud-native, managed PostgreSQL database | Globally distributed scalable database |
| Supported engines | PostgreSQL | PostgreSQL, GoogleSQL |
| Security | Data encryption at rest and in transit | Data encryption at rest and in transit |
| Data residency | Single region by default, multi-region available | Multi-region by default |
| Best for | Hybrid transactional & analytical workloads, AI applications | Mission-critical apps with high data consistency & global reach (multi-writer across regions) |

Spanner is the ideal choice for mission-critical applications that demand high scalability, unwavering consistency, and 99.999% SLA availability. Teams that are evaluating sharding or active-active configurations to work around scaling limitations can benefit from Spanner’s built-in, hands-free operations. Spanner supports development teams with its familiar SQL interface (including PostgreSQL dialect support) for seamless large-scale data processing. This ensures portability and flexibility, and simplifies use cases requiring high write scaling, global consistency, and adaptability to variable traffic. AlloyDB is a good choice for applications that need a high-performance, reliable, and scalable database with built-in support for advanced analytics and full PostgreSQL compatibility. AlloyDB supports real-time analytics applications because of its automatic data placement across tiers (e.g., buffer cache, ultra-fast cache, and block storage), and its ability to process up to 64 TiB of data per cluster in real time. AlloyDB is also reliable and offers a 99.99% SLA, including maintenance. Another option to consider is Cloud SQL, an enterprise-ready, fully managed relational database service that offers PostgreSQL, MySQL, and SQL Server engines.
It is user-friendly: it provides a straightforward user interface and the familiar SQL interfaces of PostgreSQL, MySQL, and SQL Server, and it takes only minutes to get your database up and running. Another important factor to keep in mind is your team's expertise and familiarity with different database technologies. If your team is already familiar with relational databases and the Google Cloud ecosystem, then Spanner may be the easier choice. If your team is more comfortable with PostgreSQL, then AlloyDB may be the better fit.

Conclusion

In conclusion, Spanner and AlloyDB are two powerful databases that offer different benefits and features for startups and can be used together or separately, depending on your needs. Together, AlloyDB and Spanner are a dynamic duo with which you can achieve performance and scalability based on Google’s innovations, delivering both responsive user interactions and robust, scalable back-end functionalities. With PostgreSQL and Google Cloud as the unifying threads, both services can co-exist seamlessly, forming a powerful combination for any application demanding high performance and unwavering reliability. For example, Character.ai uses AlloyDB and Spanner together in the same app that is at the core of their business:

- AlloyDB for powering the interactive experience: At the user-facing front-end, AlloyDB shines as the engine behind quick, responsive interactions. Its unparalleled speed and performance ensure a smooth and intuitive user experience, critical for engaging with the AI model.
- Spanner as the backbone of history and workflow: Behind the scenes, Spanner maintains the complete history and workflow data integral to the AI integration. Its unshakeable scale and availability guarantee seamless data management, regardless of load or complexity.

Both Spanner and AlloyDB operate within the familiar PostgreSQL ecosystem, offering a consistent and unified development experience.
This empowers developers to leverage their existing skills and knowledge, accelerating integration and workflow. Additionally, the Google Cloud Platform provides a robust and secure environment for both services, ensuring seamless data management and operational efficiency. View the full article
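To put the SLA figures quoted in the article above into perspective (99.999% for Spanner, 99.99% for AlloyDB), here is a minimal Python sketch that converts an availability percentage into the maximum downtime it permits per year. The function name and the 365-day year are assumptions made for illustration; the actual SLA terms define how downtime is measured.

```python
# Minutes in a non-leap year (assumption: 365 days).
MINUTES_PER_YEAR = 365 * 24 * 60


def max_downtime_minutes_per_year(sla_percent: float) -> float:
    """Return the downtime budget, in minutes per year, implied by an SLA."""
    unavailable_fraction = 1 - sla_percent / 100
    return MINUTES_PER_YEAR * unavailable_fraction


if __name__ == "__main__":
    for name, sla in [("Spanner", 99.999), ("AlloyDB", 99.99)]:
        minutes = max_downtime_minutes_per_year(sla)
        print(f"{name}: {sla}% allows about {minutes:.1f} minutes of downtime per year")
```

Under these assumptions, the extra "nine" shrinks the yearly downtime budget tenfold, from under an hour to a few minutes.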

-

Singapore's Fastest Growing Companies 2024 - KodeKloud

Today, we have some incredible news to share with you, our KodeKloud family, and the wider tech community. The Straits Times honored KodeKloud by naming us the 4th fastest-growing startup in Singapore! And the Financial Times honored us as the 14th fastest-growing startup in APAC! This recognition is not just a milestone but a moment of reflection on our journey, values, and the future we’re building together.

Our Humble Beginnings

When we started KodeKloud, our mission was clear: to create high-quality engineers. We believed that high-quality tech education should not be a privilege but a right accessible to everyone. For just a few hundred dollars a month we provide high-quality hands-on education to everyone anywhere in the world. We embarked on a mission to create an educational platform where quality videos and labs were available to all, aspiring to cultivate a community of skilled and confident engineers.

Bootstrapped and Proud

Our growth story is unusual but inspiring. As a bootstrapped startup, we've relied on our innovative spirit and a lean business model to navigate the tech education landscape. We prioritized sustainable growth and profitability over chasing venture capital, which allowed us to remain focused on our mission and values.

Ivan receiving the award for the Top Engineer from our CTO Vijin Palazhi

Growth Fueled by Passion and Word of Mouth

Perhaps the most remarkable aspect of our journey has been our growth strategy, or more accurately, the organic growth that has come from our community's passion for what we do. We've never leaned heavily on big marketing spends. Instead, we've grown through word of mouth, a testament to our users' love and belief in our mission. Our community's enthusiasm and feedback have been our guiding lights, pushing us to innovate and improve continuously.
Our "people" people - Cadell and Poonam - in action

A Team Like No Other

None of this would have been possible without our extraordinary team. The 70+ engineers at KodeKloud have been the real heroes behind this success story. Their dedication, creativity, and passion have turned our shared vision into a reality. This recognition belongs to every single team member who has contributed their best work, day in and day out.

A Team Like No Other!

Looking Ahead

Being named the 4th fastest-growing startup in Singapore is not the finish line for us; it's a new beginning. It validates our approach and fuels our ambition to reach even greater heights. Our commitment to making technology education accessible and affordable for everyone remains unwavering.

Enjoying the not-so-boring team talks

We are incredibly grateful to our community for believing in us and joining us on this journey. Your support and feedback inspire us to keep pushing the boundaries of what's possible in tech education. Here’s to more learning, growth, and milestones together. The future is bright, and at KodeKloud, we're just getting started. View the full article

-

A startup has emerged from stealth mode, heralding its development and fabrication of a prototype superconducting processor for AI. Extropic says it can create AI accelerators that are 'many orders of magnitude faster and more energy efficient than digital processors (CPUs/GPUs/TPUs/FPGAs).' View the full article

-

Qumulo has launched Azure Native Qumulo Cold (ANQ Cold), which it claims is the first truly cloud-native, fully managed SaaS solution for storing and retrieving infrequently accessed “cold” file data. Fully POSIX-compliant and positioned as an alternative to on-premises tape storage, ANQ Cold can be used as a standalone file service or as a backup target for any file store, including on-premises legacy scale-out NAS, and it can be integrated into a hybrid storage infrastructure, enabling access to remote data as if it were local. It can also scale to an exabyte-level file system in a single namespace. “ANQ Cold is an industry game changer for economically storing and retrieving cold file data,” said Ryan Farris, VP of Product at Qumulo. “To put this in perspective with a common use case, hospital IT administrators in charge of PACS archival data can use ANQ Cold for the long-term retention of DICOM images at a fraction of their current on-premises legacy NAS costs, while still being able to instantly retrieve over 200,000 DICOM images per month without extra data retrieval charges common to native cloud services.”

Insurance against ransomware

ANQ Cold also provides a robust, secure copy of critical data as insurance against ransomware attacks. Kiran Bhageshpur, CTO at Qumulo, said: “In combination with our cryptographically signed snapshots, customers can create an instantly accessible “daily golden” copy of their on-premises NAS data, Qumulo or legacy scale-out NAS storage. There is simply no other solution that is as affordable on an on-going basis while also allowing customers to recover to a known good state and resume operations as quickly as with ANQ Cold.” The service is priced from $9.95 per TB per month (depending on where in the world you are). Customers receive 5TB of data retrieval each month, with additional retrieval charged at $0.03/GB. There is a minimum data commitment of 250TB a month, however, which gives a minimum billable amount of $2,487.50 per month.
There is also a minimum 120-day retention period. You can start a free trial today. View the full article
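The ANQ Cold pricing quoted in the article above can be worked through with a short Python sketch. This is only an illustration under stated assumptions: it uses the starting price of $9.95 per TB (actual prices vary by region), assumes the 250TB minimum is applied by billing smaller data sets as if they were 250TB, and assumes 1 TB = 1,000 GB for the retrieval surcharge. The function and constant names are hypothetical.

```python
from decimal import Decimal

PRICE_PER_TB = Decimal("9.95")          # starting price per TB per month (from the article)
MIN_BILLABLE_TB = Decimal("250")        # minimum data commitment (from the article)
FREE_RETRIEVAL_TB = Decimal("5")        # retrieval included each month (from the article)
RETRIEVAL_PRICE_PER_GB = Decimal("0.03")
GB_PER_TB = Decimal("1000")             # assumption: decimal terabytes


def monthly_cost(stored_tb, retrieved_tb=0):
    """Estimate the monthly bill in USD for a given stored and retrieved volume."""
    # Assumption: volumes below the minimum are billed at the minimum.
    billable_tb = max(Decimal(str(stored_tb)), MIN_BILLABLE_TB)
    # Only retrieval beyond the included 5TB is charged.
    extra_tb = max(Decimal(str(retrieved_tb)) - FREE_RETRIEVAL_TB, Decimal("0"))
    return billable_tb * PRICE_PER_TB + extra_tb * GB_PER_TB * RETRIEVAL_PRICE_PER_GB


if __name__ == "__main__":
    print(monthly_cost(250))      # the minimum billable amount
    print(monthly_cost(300, 6))   # 300TB stored, 1TB of retrieval beyond the allowance
```

With 250TB stored, the sketch reproduces the minimum billable amount of $2,487.50 stated in the article.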

-

Welcome to Snowflake’s Startup Spotlight, where we learn about companies building their businesses on Snowflake. In this edition, we’ll hear how Maria Marti, founder and CEO of ZeroError, used her experiences as an engineer and an executive to build a team and create the AI analytics assistant she always wanted — but never had. What inspires you as a founder? My team and my customers. The passion that my team puts into everything we do, and the looks in our customers’ eyes when they see what ZeroError can do for them — how it solves a real issue for them. Explain ZeroError in one sentence. ZeroError is an enterprise AI platform designed to detect errors and fraud in data. What problem does ZeroError aim to solve? How did you identify that issue? There are two critical moments when you are on a data-driven team: one, when you need to make decisions with data you received; and two, when data needs to leave the organization. There is no room for mistakes. When you are presenting to the Board, or talking with regulators, your data has to be perfect. Today, we solve this the same way we did 20 years ago — with a lot of manual processes. Current data catalogs and quality tools can take a long time to implement. The user needs to pre-define almost everything, and the tools generally don’t have the flexibility to adapt to a very dynamic data environment. That’s why we created ZeroError. ZeroError is the application that I needed in my past roles as an executive in Fortune 100 companies, but it did not exist. ZeroError applies the power of AI to help improve data quality and analytics. Our proprietary AI detects complex data anomalies without human input and without defining rules. It is an executive-centric application for critical and timely decision-making, reporting and controls. As a founder and innovator, what is your take on the rapidly changing AI landscape? We are so fortunate to live in this exciting time. 
I truly believe that AI is not here to replace humans, but to help us achieve more and make us more efficient. At our heart, we are an AI company. ZeroError is your AI assistant for analytics, and I think that’s super cool. We developed our proprietary AI and integrated other LLMs into our offering. We’re launching AI copilots focused on specific business solutions and customer needs. Our approach is different because we have a unique combination of knowing the issue we are addressing inside and out, the team’s years of experience as agents of change in large organizations, our superb tech skills, and loving what we are doing. Passion is everything! How is the AI surge changing what businesses expect from their platforms and tools? Expectations are definitely very high. In the case of ZeroError, I would say that businesses are surprised by what we can achieve with AI — but at the same time, they expect to be surprised. Many AI applications, especially those focused on business intelligence, “assume” that the data they receive is perfect. That is a huge assumption. Any good BI needs good data; you have to understand and trust your data quality to be able to trust the output of your apps. During Mobile World Congress 2024 in Barcelona, we got so much attention and traction because ZeroError is a platform that tells the user if they can trust the data. At the company’s Mobile World Congress 2024 booth, ZeroError Founder and CEO Maria Marti saw firsthand how businesses have high expectations for AI applications. How do you leverage Snowflake to push the envelope in your industry? Working with Snowflake has been a critical building block. Snowflake makes our business lives easier, allowing us to focus on what we are really good at. The Snowflake platform is tremendously intuitive, and gives us the flexibility to grow and to structure our Snowflake instance in a way that fits our changing needs. 
For a startup, time is everything: you need to go fast, iterate and continue to innovate. Snowflake has been key in accelerating our time to market. The cost transparency is critical for any startup, and we share the common passion of putting customers at the center of our businesses. What’s the most valuable piece of advice you got about how to run a startup? Always be true to yourself and remember why you created the company, especially in the difficult moments. That came from my father, who has been an entrepreneur all his life. What’s a lesson you learned the hard way? All my life I took mistakes as an opportunity to learn. I think that not taking risks is the biggest risk you can take. And of course if you try new things, you are going to make mistakes, but that is an opportunity to improve and to get better. I always tell my teams and partners: It is when something does not work that the true character of the partnership comes up and we have an opportunity to differentiate ourselves and become stronger as a team. Learn more about ZeroError at www.zeroerror.ai. If you’re a startup building on Snowflake, check out the Powered by Snowflake Startup Program for info on how Snowflake can support your startup goals. The post Snowflake Startup Spotlight: ZeroError appeared first on Snowflake. View the full article

-

Here are 5 trends that startups should keep an eye on ... https://www.snowflake.com/blog/five-trends-changing-startup-ecosystem/

-

- trends

- ecosystems


-

Editor’s note: Here we take a look at how Branch, a fintech startup, built their data platform with BigQuery and other Google Cloud solutions that democratized data for their analysts and scientists.

As a startup in the fintech sector, Branch helps redefine the future of work by building innovative, simple-to-use tech solutions. We’re an employer payments platform, helping businesses provide faster pay and fee-free digital banking to their employees. As head of the Behavioral and Data Science team, I was tapped last year to build out Branch’s team and data platform. I brought my enthusiasm for Google Cloud and its easy-to-use solutions to the first day on the job.

We chose Google Cloud for ease-of-use, data & savings

I had worked with Google Cloud previously, and one of the primary mandates from our CTO was “Google Cloud-first,” with the larger goal of simplifying unnecessary complexity in the system architecture and controlling the costs associated with being on multiple cloud platforms. From the start, Google Cloud’s suite of solutions supported my vision of how to design a data team. There’s no one-size-fits-all approach. It starts with asking questions: what does Branch need? Which stage are we at? Will we be distributed or centralized? But above all, what parameters in the product will need to be optimized with analytics and data science approaches? With team design, product parameterization is critical. In a product-driven company, the data science team can be most effective by tuning a product’s parameters. For example, a recommendation engine for an ecommerce site is driven by algorithms and underlying models that update parameters. In “Show X to this type of person but Y to this type of person,” X and Y are the parameters optimized by modeling behavioral patterns. Data scientists behind the scenes can run models of how that engine should work, and determine which changes are needed.
By focusing on tuning parameters, the team is designed around determining and optimizing an objective function. That of course relies heavily on the data behind it. How do we label the outcome variable? Is a whole labeling service required? Is it clean data with a pipeline that won’t require a lot of engineering work? What data augmentation will be needed? With that data science team design envisioned, I started by focusing on user behavior: deciding how to monitor and track it, how to partner with the product team to ensure it’s in line with the product objectives, then spinning up A/B testing and monitoring. On the optimization side, transaction monitoring is critical in fintech. We need to look for low-probability events and abnormal patterns in the data, and then take action, either reaching out to the user as quickly as possible to inform them, or stopping the transaction directly. In the design phase, we need to determine if these actions need to be done in real time or after the fact. Is it useful to the user to have that information in real time? For example, if we are working to encourage engagement, and we miss an event or an interaction, it’s not the end of the world. It’s different with a fraud monitoring system, for which you’ve got to be much more strict about real-time notifications.

Our data infrastructure

There are many use cases at Branch for data cloud technologies from Google Cloud. One is with “basic” data work. It’s been incredibly easy to use BigQuery, Google’s serverless data warehouse, which is where we’ve replicated all of our SQL databases, and Cloud Scheduler, the fully managed enterprise-grade cron job scheduler. These two tools, working together, make it easy to organize data pipelining. And because of their deep integration, they play well with other Google Cloud solutions like Cloud Composer and Dataform, as well as with services, like Airflow, from other providers.
Especially for us as a startup, the whole Google Cloud suite of products accelerates the process of getting established and up and running, so we can perform the “bread-and-butter” work of data science. We also use BigQuery as a holder of heavier stats, and we train our models there, weekly, monthly, nightly, depending on how much data we collect. Then we leverage the messaging and ingestion tool Pub/Sub and its event systems to get the response in real time. We evaluate the output for that model in a Dataproc cluster or Dataform, and run all of that in Python notebooks, which can call out to BigQuery to train a model, or get evaluated and pass the event system through.

Full integration of data solutions

At the next level, you need to push data out to your internal teams. We are growing and evolving, so I looked for ways to save on costs during this transition. We do a heavy amount of work in Google Sheets because it integrates well with other Google services, getting data and visuals out to the people who need them and enabling them to access raw data and refresh as needed. Google Groups also makes it easy to restrict access to data tables, which is a vital concern in the fintech space. The infrastructure management and integration of Google Groups make it super useful. If an employee departs the organization, we can easily delete or control their level of access. We can add new employees to a group that has a certain level of rights, or read and write access to the underlying databases. As we grow with Google Cloud, I also envision being able to track the user levels, including who’s running which SQL queries and who’s straining the database and raising our costs.

A streamlined data science team saves costs

I’d estimate that Google Cloud’s solutions have saved us the equivalent of one full-time engineer we’d otherwise need to hire to link the various tools together, making sure that they are functional and adding more monitoring.
Because of the fully managed features of many of Google Cloud’s products, that work is done for us, and we can focus on expanding our customer products. We’re now 100% Google Cloud for all production systems, having consolidated from IBM, AWS, and other cloud point solutions. For example, Branch is now expanding financial wellness offerings for our customers to encourage better financial behavior through transaction monitoring, forecasting their spend and deposits, and notifying them of risks or anomalies. With those products and others, we’ll be using and benefiting from the speed, scalability, and ease of use of Google Cloud solutions, where they always keep data, and data teams, top of mind. Learn more about Branch. Curious about other use cases for BigQuery? Read how retailers can use BigQuery ML to create demand forecasting models.
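The transaction monitoring described in this article, looking for low-probability events and abnormal patterns and then notifying the user, could be sketched as follows. This is not Branch's actual method, which the article does not describe; it is a minimal illustration that swaps in a plain z-score test against a user's recent transaction amounts. The function name, the threshold of 3 standard deviations, and the sample data are all assumptions.

```python
from statistics import mean, stdev


def is_anomalous(history, amount, z_threshold=3.0):
    """Flag an amount that deviates strongly from a user's past amounts.

    history: list of the user's previous transaction amounts (hypothetical data).
    amount: the new transaction amount to check.
    z_threshold: how many standard deviations count as abnormal (assumed value).
    """
    if len(history) < 2:
        # Not enough data to estimate spread; do not flag.
        return False
    mu = mean(history)
    sigma = stdev(history)
    if sigma == 0:
        # All past amounts identical: any different amount is unusual.
        return amount != mu
    return abs(amount - mu) / sigma > z_threshold


if __name__ == "__main__":
    past_amounts = [20.0, 25.0, 22.0, 19.0, 24.0, 21.0, 23.0]
    for new_amount in (22.5, 500.0):
        flag = "flag for review" if is_anomalous(past_amounts, new_amount) else "ok"
        print(f"{new_amount}: {flag}")
```

A production fraud system would need far more than this (real-time delivery, richer features, and tuning against labeled outcomes), but the sketch shows the basic shape of scoring each event against a baseline and acting on outliers.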

-

