Search the Community

Showing results for tags 'fintech'.

-

Financial technology is developing quickly as it continues to bring change to the financial industry. Learn what it means in this summary. View the full article

-

Editor’s note: Apex FinTech Solutions Inc. ("Apex") enables modern investing and wealth management tools through an ecosystem of frictionless platforms, APIs, and services. As part of its clearing and custody services (provided through Apex Clearing Corporation, a wholly owned subsidiary of Apex Fintech Solutions Inc.), Apex wanted to provide its fintech clients in the trading and investment sector with more timely and accurate margin calculations. To transform this traditionally on-premises process, the company migrated to AlloyDB for PostgreSQL to enable real-time decision-making and risk management for clients and investors.

We know that time is money, especially for investors. In the trading and investment sector, fintech companies are leading the charge as investors demand faster, more secure, and more intuitive experiences. Developing solutions for increasingly tech-savvy clients, however, can take a toll on computing resources. That’s why companies turn to us, Apex FinTech Solutions. Our modular ecosystem of APIs and platforms includes trading solutions that provide the routing for execution exchanges and market makers. To ensure top-notch speed and performance for our margin calculation workloads, we chose AlloyDB for PostgreSQL as our database.

Overhauling processes to invest in our success

Recently, Apex leadership has been focused on transforming on-premises processes into SaaS-based product offerings. We saw an opportunity for improvement in our margin calculation method, a crucial service for brokerages. Margin is the capital that an investor borrows from a broker to purchase an investment and represents the difference between the investment's value and the amount borrowed. Investors should continually monitor and understand margin requirements, because they must maintain sufficient equity to hold open positions. The amount of required equity fluctuates based upon portfolio mix and optimization, price movements, and underlying market volatility.
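To make the relationship between position value, borrowed capital, and required equity concrete, here is a minimal arithmetic sketch. It is not Apex's calculation method: the `MarginAccount` class, the `margin_shortfall` helper, the 25% maintenance rate, and all dollar amounts are hypothetical values chosen for illustration.

```python
from dataclasses import dataclass


@dataclass
class MarginAccount:
    market_value: float  # current value of the positions held
    loan_balance: float  # amount borrowed from the broker

    @property
    def equity(self) -> float:
        # Equity is what the investor would keep after repaying the loan.
        return self.market_value - self.loan_balance


def margin_shortfall(account: MarginAccount, maintenance_rate: float) -> float:
    """Return the equity missing to meet the requirement (0.0 if none)."""
    required_equity = account.market_value * maintenance_rate
    return max(0.0, required_equity - account.equity)


# Hypothetical numbers: $10,000 of stock bought with a $5,000 loan.
account = MarginAccount(market_value=10_000.0, loan_balance=5_000.0)
healthy = margin_shortfall(account, maintenance_rate=0.25)

# Same loan after the positions fall to $6,000 in value.
account_after_drop = MarginAccount(market_value=6_000.0, loan_balance=5_000.0)
shortfall = margin_shortfall(account_after_drop, maintenance_rate=0.25)
```

In this sketch the first account needs no extra equity, while the price drop leaves the second account short, which is why required equity has to be re-evaluated as prices move.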
It is also important to understand that trading firms have a fixed amount of capital they can allocate to their specific margin obligations at any given time, which may affect the requirements and equity needed to maintain their open positions.

In our previous system, we used a Java application with Microsoft SQL Server as its backend to calculate margins as a batch process that provided the start-of-day buying power numbers to our clients and investors. We ran the process in our data center via a database export file, which we chunked up and distributed across several worker nodes to calculate the margins in mini-batches.

As part of Apex’s digital transformation and cloud migration, we needed real-time data from APIs to calculate the margin on demand and help our customers determine their risk in seconds. We knew this capability would provide a strategic advantage for Apex and our customers.

AlloyDB: a convincing solution

AlloyDB is a fully managed, PostgreSQL-compatible database solution that provides openness and scalability. As a highly regulated financial institution, we treated total system availability and performance as critical considerations. We quickly adopted AlloyDB with confidence since it was compatible with our high availability (HA) and disaster recovery (DR) requirements right out of the box.

Today, a combination of a new microservices architecture and AlloyDB allows us to query directly against the real-time account data stored in the database. The functionality we use includes AlloyDB read replicas, the Google Kubernetes Engine (GKE) cluster autoscaler, a Pub/Sub event bus, and the AlloyDB columnar engine.

The most crucial part of the architecture is its ability to separate write and read operations between the AlloyDB primary instance and AlloyDB read replicas. This capability provides the flexibility to segregate transactional and analytical queries, improving performance and scalability.
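The read/write separation described above can be sketched in a few lines. This is an illustrative routing policy only, not Apex's implementation or an AlloyDB API: the `QueryRouter` class and the endpoint names are hypothetical, and classifying statements by their first keyword is a deliberate simplification (for example, it would misroute `SELECT ... FOR UPDATE`).

```python
import itertools


class QueryRouter:
    """Send read-only statements to replicas and everything else to the primary."""

    def __init__(self, primary: str, replicas: list[str]) -> None:
        self.primary = primary
        # Fall back to the primary when no replicas are running.
        self._replica_cycle = itertools.cycle(replicas or [primary])

    def endpoint_for(self, sql: str) -> str:
        words = sql.split()
        verb = words[0].upper() if words else ""
        if verb == "SELECT":
            # Analytical reads are spread round-robin across replicas.
            return next(self._replica_cycle)
        # Writes (and anything unrecognized) go to the primary instance.
        return self.primary


# Hypothetical endpoint names.
router = QueryRouter("primary", ["replica-1", "replica-2"])
first_read = router.endpoint_for("SELECT * FROM positions WHERE account_id = 42")
second_read = router.endpoint_for("select count(*) from positions")
write = router.endpoint_for("UPDATE positions SET qty = 10 WHERE id = 1")
```

Keeping the routing decision in one place means replicas can be added or removed without touching the code that issues queries.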
Since AlloyDB uses a disaggregated, shared storage system to store data, we can spin up read replicas on demand without waiting for data to be copied over from the primary instance. When the workload is complete, we can scale down replicas to minimize costs. Moreover, we enabled the AlloyDB columnar engine since margin calculations are an analytical query from the database’s point of view. The columnar engine reduced the total CPU load on the database, saving costs by minimizing the required number of read replicas.

A GKE cluster configured for autoscaling based on the number of messages in a Pub/Sub queue allows resources to scale up dynamically, so we can process the entire workload quickly and in parallel, and then spin back down once processing is complete.

Figure 1: Architecture diagram of modern margin calculation in Google Cloud

Crunching the numbers: 50% reduction in processing time

This new, flexible architecture offers Apex customers the choice between on-demand single account margin calculations via an Apigee API or comprehensive nightly batch jobs for all accounts. This in turn gives us an opportunity to expand our existing service offerings and increase customer value.

The AlloyDB-based solution achieved a remarkable 50 percent reduction in processing time, enabling margin calculations for 100,000 accounts in just one minute.[1] This is a significant improvement in efficiency and scalability. With the flexibility and speed of AlloyDB, we have significant potential to improve these results with further tuning.

We are excited to collaborate with Google Cloud to use AlloyDB in our scalable architecture for batch and real-time margin calculation without the need to move or copy data. We are now exploring the potential to migrate additional traditional PostgreSQL instances and continue driving disruption and innovation for Apex and the future of financial services.
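The queue-driven autoscaling mentioned in the architecture discussion above comes down to a simple sizing rule: the deeper the backlog, the more workers run, within fixed bounds. The sketch below shows that rule in isolation. It is not the GKE or Pub/Sub configuration Apex uses; the `desired_workers` function, the per-worker target, and the bounds are all assumptions made for illustration.

```python
import math


def desired_workers(
    backlog: int,
    target_per_worker: int,
    min_workers: int = 0,
    max_workers: int = 50,
) -> int:
    """Pick a worker count so each worker handles about target_per_worker messages."""
    if target_per_worker <= 0:
        raise ValueError("target_per_worker must be positive")
    wanted = math.ceil(backlog / target_per_worker)
    # Clamp to the allowed range so the pool neither vanishes nor grows unbounded.
    return max(min_workers, min(max_workers, wanted))


# Hypothetical backlog sizes, with each worker targeted at 500 messages.
idle = desired_workers(backlog=0, target_per_worker=500)
small_burst = desired_workers(backlog=1_200, target_per_worker=500)
nightly_batch = desired_workers(backlog=100_000, target_per_worker=500)
```

With these made-up numbers, an empty queue scales the pool to zero, a small burst asks for three workers, and a full batch is capped at the configured maximum.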
Get started

Learn more about AlloyDB for PostgreSQL and start a free trial today!

1. 50% reduction in processing time is based on internal testing and benchmarking conducted in November 2024 using the Google database and services.

All product and company names are trademarks ™ or registered ® trademarks of their respective holders. Use of them does not imply any affiliation with or endorsement by them.

Apex Fintech Solutions is a fintech powerhouse enabling seamless access, frictionless investing, and investor education for all. Apex’s omni-suite of scalable solutions fuels innovation and evolution for hundreds of today’s market leaders, challengers, change makers, and visionaries. The Company’s digital ecosystem creates an environment where clients with the biggest ideas are empowered to change the world. Apex works to ensure its partners succeed on the frontlines of the industry via bespoke solutions through its Apex Clearing™, Apex Advisor Solutions™, Apex Silver™, and Apex CODA Markets™ brands.

Apex Clearing Corporation, a wholly-owned subsidiary of Apex Fintech Solutions Inc., is an SEC registered broker dealer, a member of FINRA and SIPC, and is licensed in 53 states and territories. Securities products and services referenced herein are provided by Apex Clearing Corporation. FINRA BrokerCheck reports for Apex Clearing Corporation are available at: http://www.finra.org/brokercheck

Nothing herein shall be construed as a recommendation to buy or sell any security. While we have made every attempt to ensure that the information contained in this document has been obtained from reliable sources, Apex is not responsible for any errors or omissions, or for the results obtained from the use of this information.
All information in this document is provided “as is”, with no guarantee of completeness, accuracy, timeliness or of the results obtained due to the use of this information, and without warranty of any kind, express or implied, including but not limited to warranties of performance, merchantability and fitness for a particular purpose. In no event will Apex be liable to you or anyone else for any decision made or taken in reliance on the information in this document or for any consequential, special or similar damages, even if advised of the possibility of such damages. Dissemination of this information is prohibited without Apex’s written permission. View the full article

-


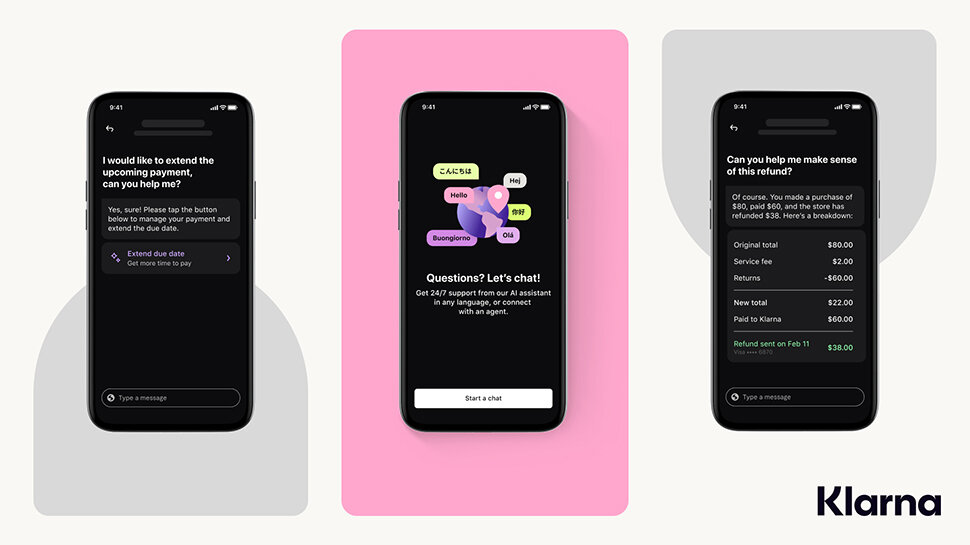

While most people would prefer to chat with real employees when they have a problem with a company’s products or services, chatbots are the first and often only port of call these days. They are getting ever smarter too, thanks to modern AI, but this comes at a cost: fewer human jobs in customer service.

For example, take Swedish fintech Klarna. A post on the company’s website announced proudly that its OpenAI-powered Klarna AI assistant had handled two-thirds of customer service chats in its first month. For context, Klarna says that is 2.3 million conversations. It also says that the AI is now doing the equivalent work of 700 full-time agents and is “on par with human agents in regard to customer satisfaction score”. Klarna also adds that “it is more accurate in errand resolution, leading to a 25% drop in repeat inquiries”, although the decline could be partially attributed to people not wanting to engage with an AI chatbot.

No way connected to workforce reductions

Speed is a positive factor too. Klarna says customers “resolve their errands in less than 2 mins compared to 11 mins previously”. Then there’s the bottom line: the switch to AI has reportedly driven a $40 million profit improvement to Klarna in 2024.

“This AI breakthrough in customer interaction means superior experiences for our customers at better prices, more interesting challenges for our employees, and better returns for our investors,” said Sebastian Siemiatkowski, co-founder and CEO of Klarna. “We are incredibly excited about this launch, but it also underscores the profound impact on society that AI will have. We want to reemphasize and encourage society and politicians to consider this carefully and believe a considerate, informed and steady stewardship will be critical to navigate through this transformation of our societies.”
The claim that the “AI is now doing the equivalent work of 700 full-time agents” will raise some eyebrows, especially given that the company laid off approximately the same number of employees in 2022 due to inflation and economic uncertainty. Despite the similarity in numbers, Klarna says there is no relation between the two. When Fast Company asked the company about it, it was told: “This is in no way connected to the workforce reductions in May 2022, and making that conclusion would be incorrect. We chose to share the figure of 700 to indicate the more long-term consequences of AI technology, where we believe it is important to be transparent in order to create an understanding in society. We think [it’s] important to proactively address these issues and encourage a thoughtful discussion around how society can meet and navigate this transformation.”

View the full article

-

It is often said that a journey of a thousand miles begins with a single step. Ten years ago, building a data technology stack felt a lot more like a thousand miles than it does today; technology, automation, and business understanding of the value of data have significantly improved. Instead, the problem today is knowing how to take the first step.

Figure: PrimaryBid Overview

PrimaryBid is a regulated capital markets technology platform connecting public companies to their communities during fundraisings. But choosing data technologies presented a challenge as our requirements had several layers:

- PrimaryBid facilitates novel access for retail investors to short-dated fundraising deals in the public equity and debt markets. As such, we need a platform that can be elastic to market conditions.
- PrimaryBid operates in a heavily regulated environment, so our data stack must comply with all applicable requirements.
- PrimaryBid handles many types of sensitive data, making information security a critical requirement.
- PrimaryBid’s data assets are highly proprietary; to make the most of this competitive advantage, we needed a scalable, collaborative AI environment.
- As a business with international ambitions, the technologies we pick have to scale exponentially, and be globally available.
- And, perhaps the biggest cliche, we needed all of the above for as low a cost as possible.

Over the last 12 or so months, we built a lean, secure, low-cost solution to the challenges above, partnering with vendors that are a great fit for us; we have been hugely impressed by the quality of tech available to data teams now, compared with only a few years ago. We built an end-to-end unified Data and AI Platform. In this blog, we will describe some of the decision-making mechanisms together with some of our architectural choices.

The 30,000 foot view

The 30,000 foot view of PrimaryBid’s environment will not surprise any data professional.
We gather data from various sources, structure it into something useful, surface it in a variety of ways, and combine it together into models. Throughout this process, we monitor data quality, ensure data privacy, and send alerts to our team when things break.

Figure: High Level summary of our data stack

Data gathering and transformation

For getting raw data into our data platform, we wanted technology partners whose solutions were low-code, fast, and scalable. For this purpose, we chose a combination of Fivetran and dbt to meet our needs.

Fivetran supports a huge range of pre-built data connectors, which allow data teams to land new feeds in a matter of minutes. The cost model we have adopted is based on monthly ‘active’ rows, i.e., we only pay for what we use. Fivetran also takes care of connector maintenance, freeing up massive amounts of engineering time by outsourcing the perpetual cycle of updating API integrations.

Once the data is extracted, dbt turns raw data into a usable structure for downstream tools, a process known as analytics engineering. dbt and Fivetran make a synergistic partnership, with many Fivetran connectors having dbt templates available off the shelf. dbt is hugely popular with data engineers, and contains many best practices from software development that ensure analytics transformations are robust.

Both platforms have their own orchestration tools for pipeline scheduling and monitoring, but we deploy Apache Airflow 2.0, managed via Google Cloud’s Cloud Composer, for finer-grained control.

Data storage, governance, and privacy

This is the point in our data stack where Google Cloud starts to solve a whole variety of our needs. We start with Google Cloud’s BigQuery. BigQuery is highly scalable, serverless, and separates compute costs from storage costs, allowing us only to pay for exactly what we need at any given time.
Beyond that though, what sold us on the BigQuery ecosystem was the integration of data and model privacy, governance, and lineage throughout. Leveraging Google Cloud’s Dataplex, we set security policies in one place, on the raw data itself. As the data is transformed and passed between services, these same security policies are adhered to throughout.

One example is PII, which is locked away from every employee bar a necessary few. We tag data one time with a ‘has_PII’ flag, and it doesn’t matter what tool you are using to access the data: if you do not have permission to access PII in the raw data, you will never be able to see it anywhere.

Figure: Unified governance using Dataplex

Data analytics

We chose Looker for our self-service and business intelligence (BI) platform based on three key factors:

- Instead of storing data itself, Looker writes SQL queries directly against your data warehouse. To ensure it writes the right query, engineers and analysts build Looker analytics models using ‘LookML’. LookML for the most part is low-code, but for complex transformations, SQL can be written directly into the model, which plays to our team's strong experience with SQL. In this instance we store the data in BigQuery and access it through Looker.
- Being able to extend Looker into our platforms was a core decision factor. With the LookML models in place, transformed, clean data can be passed to any downstream service.
- Finally, the interplay between Looker and Dataplex is particularly powerful. Behind the scenes, Looker is writing queries against BigQuery. As it does so, all rules around data security and privacy are preserved.

There is much more to say about the benefits we found using Looker; we look forward to discussing these in a future blog post.

AI and machine learning

The last step in our data pipelines is our AI/ML environment. Here, we have leaned even further into Google Cloud’s offerings, and decided to use Vertex AI for model development, deployment, and monitoring.
To make model building as flexible as possible, we use the open-source Kubeflow framework within the Vertex AI Pipelines environment for pipeline orchestration; this framework decomposes each step of the model building process into components, each of which performs a fully self-contained task, and then passes metadata and model artifacts to the next component in the pipeline. The result is highly adaptable and visible ML pipelines, where individual elements can be upgraded or debugged independently without affecting the rest of the code base.

Figure: Vertex AI Platform

Finishing touches

With this key functionality set up, we’ve added a few more components to add even more functionality and resilience to the stack:

- Real-time pipelines: running alongside our Fivetran ingestion, we added a lightweight pipeline that brings in core transactional data in real time. This leverages a combination of managed Google Cloud services, namely Pub/Sub and Dataflow, and adds both speed and resilience to our most important data feeds.
- Reverse ETL: leveraging a CDP partner, we write analytics attributes about our customers back into our customer relationship management tools, to ensure we can build relevant audiences for marketing and service communications.
- Generative AI: following the huge increase in available gen AI technologies, we’ve built several internal applications that leverage Google’s PaLM 2. We are working to build an external application too, so watch this space!

So there you have it, a whistle-stop tour of a data stack. We’re thrilled with the choices we’ve made, and are getting great feedback from the business. We hope you found this useful and look forward to covering how we use Looker for BI in our organization.

Special thanks to the PrimaryBid Data Team, as well as Stathis Onasoglou and Dave Elliott for their input prior to publication.
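The component-based pipeline design described in the AI and machine learning section, where each step is self-contained and hands its artifacts to the next, can be illustrated without any framework. The sketch below is plain Python, not the Kubeflow or Vertex AI API, and every name in it (`prepare_data`, `train_model`, `evaluate_model`, `run_pipeline`, the toy "model") is hypothetical.

```python
from typing import Callable

Artifacts = dict[str, object]
Component = Callable[[Artifacts], Artifacts]


def prepare_data(artifacts: Artifacts) -> Artifacts:
    # Drop missing values from the raw input.
    rows = artifacts["raw_rows"]
    return {"clean_rows": [row for row in rows if row is not None]}


def train_model(artifacts: Artifacts) -> Artifacts:
    # A stand-in "model": just the mean of the training rows.
    rows = artifacts["clean_rows"]
    return {"model": {"mean": sum(rows) / len(rows)}}


def evaluate_model(artifacts: Artifacts) -> Artifacts:
    # Report the largest absolute deviation from the model's prediction.
    mean = artifacts["model"]["mean"]
    rows = artifacts["clean_rows"]
    return {"max_abs_error": max(abs(row - mean) for row in rows)}


def run_pipeline(components: list[Component], initial: Artifacts) -> Artifacts:
    """Run components in order, accumulating the artifacts each one emits."""
    artifacts = dict(initial)
    for component in components:
        artifacts.update(component(artifacts))
    return artifacts


result = run_pipeline(
    [prepare_data, train_model, evaluate_model],
    {"raw_rows": [1.0, None, 3.0]},
)
```

Because each component only reads and writes named artifacts, any one of them can be swapped out or tested in isolation, which is the property the article attributes to its pipelines.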
View the full article

-

The fintech landscape is ever-evolving, driven by tech advancements, regulatory changes, and shifts in consumer behavior. View the full article

-

Fintech companies may reinforce their defenses and safeguard customer data by implementing extensive security protocols. View the full article

-

From artificial intelligence to 5G, 2024 could be a pivotal year for the tech industry and workers in the U.K. View the full article

-


Financial services are transforming digitally, with most people using online banking or mobile apps to save time and money. View the full article

-

5W Public Relations, one of the largest independently owned PR firms in the U.S., View the full article

-

25+ sessions (and more to be announced) showcasing recent developments and the direction of open source in financial services and providing unique opportunities to hear from and engage with those who are leveraging open source software to solve industry challenges. SAN FRANCISCO, October 13, 2022 — The Linux Foundation, the nonprofit organization enabling mass innovation through open source… Source The post The Linux Foundation and Fintech Open Source Foundation Announce the Conference Schedule for Open Source in Finance Forum New York 2022 appeared first on Linux.com. View the full article

-


The integration of FinTech continues to revolutionize the way businesses interact with their consumers. What started as a viable solution to eliminating the need to carry physical currency has now become a multi-billion-dollar industry. Basically, FinTech is a catch-all term used to describe software, mobile applications and other integrated technologies that improve and automate traditional […] The post The Future of FinTech and the Cloud appeared first on DevOps.com. View the full article

-

The Fintech Open Source Foundation builds on the success of FDC3, its most adopted open source project to date

New York, NY – July 13, 2022 – The Fintech Open Source Foundation (FINOS), the financial services umbrella of the Linux Foundation, announced today during its Open Source in Finance Forum (OSFF) London the launch of FDC3 2.0. FDC3 supports efficient, streamlined desktop interoperability between financial institutions with enhanced connectivity capabilities.

The global FDC3 community is fast-growing and includes application vendors, container vendors, a large presence from sell-side firms and a growing participation from buy-side firms, all collaborating on advancing the standard. You can check out all the community activity here: http://fdc3.finos.org/community

The latest version of the standard delivers universal connectivity to the financial industry’s desktop applications with a significant evolution of all four parts of the Standard: the Desktop Agent API, the App Directory providing access to apps, and the intent and context messages that they exchange.

MAIN IMPROVEMENTS

FDC3 2.0 significantly streamlines the API for both app developers and desktop agent vendors alike, refining the contract between these two groups based on the last three years’ working with FDC3 1.x.

Desktop agents now support two-way data-flow between apps (both single transactions and data feeds), working with specific instances of apps and providing metadata on the source of messages, through an API that has been refined through feedback from across the FDC3 community.

This updated version also redefines the concept of the “App Directory”, simplifying the API, greatly improving the App Record and the discoverability experience for users and making the App Directory fit-for-purpose for years to come (and the explosion of vendor interest FDC3 is currently experiencing).
Finally, FDC3 2.0 includes a host of new standard intents and context, which define and standardize message exchanges for a range of very common workflows, including interop with CRMs, Communication apps (emails, calls, chats), data visualization tools, research apps and OMS/EMS/IMS systems. This is one of the most exciting developments as it represents diverse parts of the financial services software industry working together through the standard.

MAIN USES

- Help Manage Information Overload. Finance is an information-dense environment. Typically, traders will use several different displays so that they can keep track of multiple information sources at once. FDC3 helps with this by sharing the “context” between multiple applications, so that they collectively track the topic the user is focused on.
- Work Faster. FDC3 standardizes a way to call actions and exchange data between applications (called “intents”). Applications can contribute intents to each other, extending each other’s functionality. Instead of the user copy-and-pasting bits of data from one application to another, FDC3 makes sure the intents have the data they need to seamlessly transition activity between applications.
- Platform-Agnostic. As an open standard, FDC3 can be implemented on any platform and in any language. All that is required is a “desktop agent” that supports the FDC3 standard, which is responsible for coordinating application interactions. FDC3 is successfully running on Web and Native platforms in financial institutions around the world.
- End the integration nightmare. By providing support for FDC3, vendors and financial organizations alike can avoid the bilateral or trilateral integration projects that plague desktop app roll-out, cause vendor lock-in and result in a slow pace of change on the Financial Services desktop.

“It is very rewarding to see the recent community growth around FDC3,” said Jane Gavronsky, CTO of FINOS.
“More and more diverse participants in the financial services ecosystem recognize the key role a standard such as FDC3 plays for achieving a true open financial services ecosystem. We are really excited about FDC3 2.0 and the potential for creating concrete, business-driven use cases that it enables.”

What this means for the community

“The wide adoption of the FDC3 standard shows the relevance of the work being conducted by FINOS. At Symphony we are supporters and promoters of this standard. This latest version, FDC3 2.0, and its improvements demonstrate substantial progress in this work and its growing importance to the financial services industry,” said Brad Levy, Symphony CEO.

“The improvements to the App Directory and its ramifications for market participants and vendors are game-changing enough in themselves to demand attention from everyone: large sell-sides with large IT departments, slim asset managers who rely on vendor technology, and vendors themselves,” said Jim Bunting, Global Head of Partnerships, Cosaic.

“FDC3 2.0 delivers many useful additions for software vendors and financial institutions alike. Glue42 continues to offer full support for FDC3 in its products. For me, the continued growth of the FDC3 community is the most exciting development,” said Leslie Spiro, CEO, Tik42/Glue42. “For example, recent contributions led by Symphony, SinglePoint and others have helped to extend the common data contexts to cover chat and contacts; this makes FDC3 even more relevant and strengthens our founding goal of interop ‘without requiring prior knowledge between apps’.”

“Citi is a big supporter of FDC3 as it has allowed us to simplify how we create streamlined intelligent internal workflows, and partner with strategic clients to improve their overall experience by integrating each other’s services.
The new FDC3 standard opens up even more opportunities for innovation between Citi and our clients,” said Amit Rai, Technology Head of Markets Digital & Enterprise Portal Framework at Citi.

“FDC3 has allowed us to build interoperability within our internal application ecosystem in a way that will allow us to do the same with external applications as they start to incorporate these standards,” said Bhupesh Vora, European Head of Capital Markets Technology, Royal Bank of Canada. “The next evolution of FDC3 will ensure we continue to build richer context sharing capabilities with our internal applications and bring greater functionality to our strategic clients through integration with the financial application ecosystem for a more cohesive experience overall.”

“Interoperability allows the Trading team to take control of their workflows, allowing them to reduce the time it takes to get to market. In addition they are able to generate alpha by being able to quickly sort vast, multiple sources of data,” said Carl James, Global Head of Fixed Income Trading, Pictet Asset Management.

As FINOS sees continued growth and contribution to the FDC3 standard, the implementation of FDC3 2.0 will allow more leading financial institutions to take advantage of enhanced desktop interoperability. The contribution of continued updates also represents the overall wider adoption of open source technology, as reported in the 2021 State of Open Source in Financial Services annual survey. To get involved in this year’s survey, visit https://www.research.net/r/ZN7JCDR to share key insights into the ever-growing open source landscape in financial services.

Skill up on FDC3 by taking the Linux Foundation’s free FDC3 training course, or contact us at https://www.finos.org/contact-us.
Hear from Kris West, Principal Engineer at Cosaic and Lead Maintainer of FDC3, on the FINOS Open Source in Finance Podcast, where he discusses why it was important to change the FDC3 standard in order to keep up with the growing number of use cases end users are contributing to the community.

About FINOS

FINOS (The Fintech Open Source Foundation) is a nonprofit whose mission is to foster adoption of open source, open standards and collaborative software development practices in financial services. It is the center for open source developers and the financial services industry to build new technology projects that have a lasting impact on business operations. As a regulatory compliant platform, the foundation enables developers from these competing organizations to collaborate on projects with a strong propensity for mutualization. It has enabled codebase contributions from both buy- and sell-side firms and counts over 50 major financial institutions, fintechs and technology consultancies as part of its membership. FINOS is also part of the Linux Foundation, the largest shared technology organization in the world.

The post FDC3 2.0 Drives Desktop Interoperability Across the Financial Services Ecosystem appeared first on Linux Foundation. View the full article
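The two FDC3 ideas described in the announcement above, shared "context" and "intents", can be illustrated with a toy model. The sketch below is a simplified Python stand-in, not the FDC3 Desktop Agent API itself: the `ToyDesktopAgent` class and its method names are hypothetical, while the `fdc3.instrument` context type and the `ViewChart` intent name are taken from the FDC3 standard's published vocabulary.

```python
from typing import Callable

Context = dict
Handler = Callable[[Context], None]


class ToyDesktopAgent:
    """Coordinates apps: fans out context and routes intents to handlers."""

    def __init__(self) -> None:
        self._context_listeners: list[Handler] = []
        self._intent_handlers: dict[str, Handler] = {}

    def add_context_listener(self, handler: Handler) -> None:
        self._context_listeners.append(handler)

    def broadcast(self, context: Context) -> None:
        # Every listening app learns what the user is currently focused on.
        for handler in self._context_listeners:
            handler(context)

    def add_intent_listener(self, intent: str, handler: Handler) -> None:
        self._intent_handlers[intent] = handler

    def raise_intent(self, intent: str, context: Context) -> None:
        # Ask whichever app registered for this action to carry it out.
        handler = self._intent_handlers.get(intent)
        if handler is None:
            raise LookupError(f"no app handles intent {intent!r}")
        handler(context)


agent = ToyDesktopAgent()
news_app_seen: list[Context] = []
chart_app_opened: list[Context] = []
agent.add_context_listener(news_app_seen.append)
agent.add_intent_listener("ViewChart", chart_app_opened.append)

instrument = {"type": "fdc3.instrument", "id": {"ticker": "AAPL"}}
agent.broadcast(instrument)                # context sharing
agent.raise_intent("ViewChart", instrument)  # action request
```

Neither app needs to know the other exists; both only agree on the message shapes, which is the "without requiring prior knowledge between apps" goal quoted in the announcement.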

-

Industry leaders and experts across financial services, technology and open source will come together for thought-provoking insights and conversations about how to best leverage open source software to solve industry challenges. SAN FRANCISCO, June 29, 2022 — The Linux Foundation, the nonprofit organization enabling mass innovation through open source, and co-host the Fintech Open Source… Source The post The Linux Foundation and Fintech Open Source Foundation Announce New Keynote Speakers for Open Source in Finance Forum London 2022 appeared first on Linux.com. View the full article

-


HSBC to train employee in fintech

DevOps Online posted a topic in DevOps & SRE General Discussion

HSBC has recently announced that it will give employees training in fintech. With all the digital changes in banks, it is vital that employees are able to keep pace with customer demand for fintech-based products and services and improve efficiency and effectiveness. The bank is thus offering training at the University of Oxford’s Saïd Business School, where employees can learn about emerging technologies such as cryptocurrencies and blockchain. This Fintech 101 education program will provide foundation training on fintech technologies and how to use them. Employees will then learn about digital disruption and platforms as well as artificial intelligence and open banking. This opportunity will help employees gain a better knowledge of the fintech economy, leverage technology, and develop new digital products and services for their customers. The post HSBC to train employee in fintech appeared first on DevOps Online. View the full article

-

In this week’s The Long View: IPv6 gets stabbed in the back, Fintech firms’ valuations are falling, and the latest browser war seems to be over—Firefox lost. View the full article

-

Industry experts will gather to discuss the most cutting edge topics at the cross section of finance, open source and technology, revealing recent developments and the direction of open source in financial services. SAN FRANCISCO, May 19, 2022 — The Linux Foundation, the nonprofit organization enabling mass innovation through open source, and co-host Fintech Open Source Foundation (FINOS)… Source The post The Linux Foundation and Fintech Open Source Foundation Announce the Schedule for Open Source in Finance Forum London 2022, July 13 appeared first on Linux.com. View the full article

-

Our host, Shawn Ahmed, talks about the future of fintech and financial services with Chris Skinner. It is said that every child in technology is trained by their parent to be one generation out of date. How true is this statement? From writing a cheque to be paid into an account to getting paid in bitcoin, a whole generational shift has taken place over the last decade in how we approach fintech. Chris Skinner is one of the most influential people in technology. He is a best-selling author and an independent commentator on the financial markets and fintech through his blog, the Finanser.com, which is updated daily. His latest book, Digital for Good, focuses on how technology and finance can work together to address the environmental and social issues we face today and make a better world. Here is his perspective on what the future holds for the financial sector. View the full article

-

The FinTech space has grown dramatically this year as more consumers shift their financial transactions online due to the COVID-19 pandemic. Financial services companies are learning how to ship better code, faster by adopting DevOps methodologies. DevOps helps companies develop fast, fail fast and learn fast so they can fulfill customer expectations and deliver quality […] The post FinConDX: The State of DevOps in FinTech appeared first on DevOps.com. View the full article

-

All industries are feeling the pain of preventing rising risks to their applications, private information and customers’ data, and it is not surprising that this is especially true in the financial services sector. In fact, fintech companies on average spent more than $18 million in 2019 battling cybercrime, while other markets’ companies spend an average […] The post How to Secure Fintech Applications and Protect Customer Data appeared first on DevOps.com. View the full article

-

Global industry leaders and experts across financial services, technology and open source will come together virtually for thought-provoking insights and conversations about how to best leverage open source software to solve industry challenges. SAN FRANCISCO, October 13, 2020 — The Linux Foundation, the nonprofit organization enabling mass innovation through open source, along with co-host Fintech Open Source Foundation (FINOS), a nonprofit whose mission is to accelerate adoption of open source software, standards and best practices in financial services, today announced initial keynote speakers for Open Source Strategy Forum (OSSF). The event takes place virtually November 12 – 13 in Eastern Standard Time (EST, UTC−05:00). The schedule can be viewed here and the keynote speakers can be viewed here. The post The Linux Foundation and Fintech Open Source Foundation Announce Keynote Speakers for Open Source Strategy Forum 2020 appeared first on The Linux Foundation. View the full article

-

The future of Fintech infrastructure is hybrid multi-cloud. Using private and public cloud infrastructure at the same time allows financial institutions to optimise their CapEx and OpEx costs.

Why private clouds?

A private cloud is an integral part of a hybrid multi-cloud strategy for financial services organisations. It enables financial institutions to derive competitive advantage from agile implementations without incurring the security and business risks of a public cloud. Private clouds provide a more stable solution for financial institutions by dedicating exclusive hardware within financial firms’ own data centres. Private clouds also enable financial institutions to move from a traditional IT engagement model to a DevOps model and transform their IT groups from an infrastructure provider to a service provider (via a SaaS model).

OpenStack for financial services

OpenStack provides a complete ecosystem for building private clouds. Built from multiple sub-projects as a modular system, OpenStack allows financial institutions to build out a scalable private (or hybrid) cloud architecture that is based on open standards. OpenStack enables application portability among private and public clouds, allowing financial institutions to choose the best cloud for their applications and workflows at any time, without lock-in. It can also be integrated with a variety of key business systems such as Active Directory and LDAP. OpenStack software provides a solution for delivering infrastructure as a service (IaaS) to end users through a web portal and provides a foundation for layering on additional cloud management tools. These tools can be used to implement higher levels of automation and to integrate analytics-driven management applications for optimising cost, utilisation and service levels.
OpenStack software provides support for improving service levels across all workloads and for taking advantage of the high availability capabilities built into cloud-aware applications. In the world of Open Banking, the delivery of a financial application or digital customer service often depends on many contributors from various organisations working collaboratively to deliver results. Large financial institutions such as PayPal and Wells Fargo are using OpenStack for their private cloud builds. These companies are successfully leveraging the capabilities of OpenStack software, which enables efficient resource pooling, elastic scalability and self-service provisioning for end users.

The Challenge

The biggest challenge of OpenStack is automating everyday operations, year after year, while OpenStack continues to evolve rapidly.

The Solution: Ops Automation

Canonical solves this problem with total automation that decouples architectural choices from the operations codebase that supports upgrades, scaling, integration and bare metal provisioning. From bare metal to cloud control plane, Canonical’s Charmed OpenStack uses automation everywhere, leveraging model-driven operations.

Charmed OpenStack

Charmed OpenStack is an enterprise-grade OpenStack distribution that leverages MAAS, Juju, and the OpenStack charmed operators to simplify the deployment and management of an OpenStack cloud. Canonical’s Charmed OpenStack ensures private cloud price-performance, providing full automation around OpenStack deployments and operations. Together with Ubuntu, it meets the highest security, stability and quality standards in the industry.

Benefits of Charmed OpenStack for fintechs

Secure, compliant, hardened

Canonical provides up to ten years of security updates for Charmed OpenStack under the UA-I subscription for customers who value stability above all else. Moreover, the support package includes various EU and US regulatory compliance options.
Additional hardening tools and benchmarks ensure the highest level of security.

Every OpenStack version supported

Each upstream OpenStack version comes with new features that may bring measurable benefits to your business. We recognise that and provide full support for every version of OpenStack within two weeks of the upstream release. Every two years we release an LTS version of Charmed OpenStack, which we support for five years.

Upgrades included, fully automated

OpenStack upgrades are known to be painful due to the complexity of the process. By leveraging the model-driven architecture and using OpenStack Charms for automation purposes, Charmed OpenStack can be easily upgraded between its consecutive versions. This allows you to stay up to date with the upstream features, while not putting additional pressure on your operations team.

A case in point

The client: SBI BITS

SBI BITS provides IT services and infrastructure to SBI Group companies and affiliates. SBI Group is Japan’s market-leading financial services company group, headquartered in Tokyo.

When public cloud is not an option

“Operating in the highly regulated financial services industry, we need complete control over our data. If our infrastructure isn’t on-premise, it makes regulatory compliance far more complicated.”
Georgi Georgiev, CIO at SBI BITS

The challenge

With hundreds of affiliate companies relying on it for IT services, SBI BITS, the FinTech arm of SBI Group, was under immense pressure to make its infrastructure available simultaneously to numerous internal clients, often with critically short time-to-market requirements.

The solution

Canonical designed and built the initial OpenStack deployment within a few weeks, and is now providing ongoing maintenance through the Ubuntu Advantage for Infrastructure enterprise support package. The initial implementation consisted of 73 nodes each at two sites, deployed as hyper-converged infrastructure and running Ubuntu 18.04.
This architecture enables a software-defined approach that unlocks greater automation and more efficient resource utilisation, leading to significant cost savings.

The outcome

Canonical’s OpenStack deployment has streamlined infrastructure delivery, ensuring that the company can meet the IT needs of SBI Group without the stress. Automation eliminates the majority of physical work involved in resource provisioning. Canonical delivered OpenStack at one third of the price of competing proposals. Hyper-converged architecture and full-stack support seek to deliver both CAPEX and OPEX savings.

“Canonical’s solution was a third of the price of the other proposals we’d received. The solution is also proving to be highly cost-effective, both from CAPEX and OPEX perspectives.”
Georgi Georgiev, CIO at SBI BITS

Execute your hybrid cloud strategy

OpenStack gives financial institutions the ability to seamlessly move workloads from one cloud to another, whether private or public. It also accelerates time-to-market by giving a financial institution’s business units a self-service portal to access necessary resources on demand, and an API-driven platform for developing cloud-aware apps. OpenStack is a growing software ecosystem consisting of various interconnected components. Therefore, its operations can at times be challenging even in a fully automated environment. Canonical recognises that and offers fully managed services for organisations. Canonical’s managed OpenStack provides 24×7 cloud monitoring, daily maintenance, regular software updates, OpenStack upgrades and more. We are always here to discuss your cloud computing needs and to help you successfully execute your hybrid cloud strategy.

View the full article


-

The financial services (FS) industry is going through a period of change and disruption. Technology innovation has provided the means for financial institutions to reimagine the way in which they operate and interact with their customers, employees and the wider ecosystem. One significant area of development is the use of artificial intelligence (AI) and machine learning (ML), which has the potential to positively transform the FS sector.

The future of fintech AI/ML is now

AI/ML technology is helping fintechs and finservs to drive top-line growth with smarter trading and better cross-sell and upsell opportunities, while at the same time improving the bottom line with better fraud detection and collections services. Leading financial firms are looking to capitalise on these trends and transform their businesses with an end-to-end AI strategy. AI/ML is enabling firms to identify key insights from vast amounts of data, calculate risk, and automate routine tasks at unprecedented speed and scale using the power of GPU-based platforms.

AI/ML on Ubuntu

Ubuntu is the Linux distribution of choice for data professionals and software developers, and is also the most popular operating system on public clouds. Ubuntu provides the platform to power fintech AI/ML, from developing AI/ML models on high-end Ubuntu workstations, to training those models on public clouds with hardware acceleration, to deploying them to cloud, edge and IoT.

GPU-based AI/ML computing

GPU computing is the use of a graphics processing unit (GPU) as a co-processor to accelerate CPUs for compute-intensive processing. A central processing unit (CPU) usually consists of four to eight CPU cores, while the GPU consists of hundreds of smaller cores. The GPU accelerates applications running on the CPU by offloading some of the compute-intensive and time-consuming portions of the code. The rest of the application still runs on the CPU.
From a user’s perspective, the application runs faster because it uses the massively parallel processing power of the GPU to boost performance, an approach also referred to as “hybrid” computing. This massively parallel architecture is what gives the GPU its high compute performance. AI/ML application developers harness the performance of the parallel GPU architecture using CUDA, a parallel programming model invented by NVIDIA. To learn more about setting up a data science environment on Ubuntu using NVIDIA GPUs, RAPIDS and NGC Containers, read the blog post “Ubuntu for machine learning with NVIDIA RAPIDS”.

Fintech AI/ML use cases

GPU-based AI/ML computing has many use cases in financial services, such as real-time fraud detection, compliance, autonomous finance, back-end process automation, supply chain finance and improving customer experience, to name a few.

Autonomous finance

Artificial intelligence (AI) and automation can help bridge the gap between customer expectations and the services financial firms can offer. Forrester defines autonomous finance as algorithm-driven financial services that make decisions or take action on a customer’s behalf. Autonomous finance uses AI and automation to deliver personalised financial advice to customers. Robo-advisory services are algorithm-based digital platforms that offer automated financial advice or investment management services and are built to calibrate a financial portfolio to the user’s goals and risk tolerance. In times of economic uncertainty, solutions that simplify financial decisions, such as automated microsavings tools, can help consumers increase their savings. Machine learning algorithms not only allow customers to track their spending on a daily basis using these apps but also help them analyze this data to identify their spending patterns and then the areas where they can save.
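The spending-pattern step described under autonomous finance can be illustrated with a very small sketch. It is not a machine learning model: it only totals transactions per category and ranks them, which is the kind of summary such an app might show before suggesting where to save. The function name, the category labels and the sample amounts are all hypothetical.

```python
from collections import defaultdict
from decimal import Decimal


def spending_by_category(transactions):
    """Rank spending categories by total amount, largest first.

    `transactions` is an iterable of (category, amount) pairs, where the
    amount is a string such as "12.50". Returns a list of
    (category, total, share_of_all_spending) tuples.
    """
    totals = defaultdict(Decimal)  # a missing category starts at Decimal("0")
    for category, amount in transactions:
        totals[category] += Decimal(amount)

    grand_total = sum(totals.values(), Decimal("0"))
    if grand_total == 0:
        return []  # nothing spent, so nothing to rank

    ranked = sorted(totals.items(), key=lambda item: item[1], reverse=True)
    return [(cat, total, total / grand_total) for cat, total in ranked]


# Hypothetical sample data, for illustration only.
sample = [
    ("groceries", "82.40"),
    ("dining", "45.10"),
    ("dining", "38.25"),
    ("transport", "20.00"),
    ("groceries", "61.75"),
]

for category, total, share in spending_by_category(sample):
    print(f"{category:<10} {total:>8} {share:.0%}")
```

Amounts are kept as Decimal rather than float so that totals of currency values stay exact. A production tool would presumably classify transactions automatically and learn patterns over time; that part is outside this sketch.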
Combat financial crime

Financial institutions are vulnerable to a wide range of risks, including cyber fraud, money laundering, and the financing of terrorism. To combat these threats, financial institutions undertake know-your-customer (KYC) and anti-money laundering (AML) compliance activities to meet regulatory requirements. AI-enabled compliance technology can reduce the cost for financial institutions of meeting KYC requirements and decrease the false positives generated in monitoring efforts. It does so by sifting through millions of transactions quickly to spot signs of crime, establish links, detect anomalies, and cross-check against external databases to establish identity using a diverse range of parameters. Credit card fraud detection is one of the most successful applications of ML. Banks are equipped with monitoring systems that are trained on very large datasets of credit card transaction data and historical payments data. Classification algorithms can label events as “fraud” versus “non fraud”, and fraudulent transactions can then be stopped in real time. Many financial firms are exploring AI-based fraud prevention alternatives by building intelligent decisioning systems that derive patterns from the historical shopping and spending behaviour of customers to establish a baseline, which is then used to compare and score each new customer transaction.

Improved credit decisions

Lenders and credit ratings agencies routinely analyse data to establish the creditworthiness of potential borrowers. Traditional data used to generate credit scores include formal identification, bank transactions, credit history, income statements, and asset value. AI can help lenders and credit-rating institutions assess a consumer’s behaviour and verify their ability to repay a loan.
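The baseline-and-score idea described under “Combat financial crime” can be sketched in a few lines. The version below is a deliberately simplified assumption: it uses a single feature (the transaction amount) and a z-score against the customer’s own history, whereas the systems the article describes are trained classifiers over many features. The function names and the threshold of 3.0 are hypothetical choices for illustration.

```python
from statistics import mean, stdev


def build_baseline(history):
    """Summarise a customer's past transaction amounts as (mean, std dev)."""
    if len(history) < 2:
        raise ValueError("need at least two past transactions for a baseline")
    return mean(history), stdev(history)


def score_transaction(amount, baseline):
    """Return how many standard deviations `amount` lies from the baseline mean."""
    mu, sigma = baseline
    if sigma == 0:
        # All past amounts were identical: any different amount is maximally unusual.
        return 0.0 if amount == mu else float("inf")
    return abs(amount - mu) / sigma


def is_suspicious(amount, baseline, threshold=3.0):
    """Flag a transaction whose score exceeds the (assumed) threshold."""
    return score_transaction(amount, baseline) > threshold


# Hypothetical spending history for one customer.
history = [20.0, 35.0, 25.0, 30.0, 40.0]
baseline = build_baseline(history)

print(is_suspicious(32.0, baseline))   # a typical amount for this customer
print(is_suspicious(500.0, baseline))  # far outside the usual range
```

A flagged transaction would then be handed to whatever action the institution has chosen, such as blocking it in real time or queuing it for review.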
Supply chain finance

The scope and complexity of supply chains are growing fast, and the relatively high cost of assessing firm creditworthiness and meeting KYC and AML requirements results in a huge trade finance gap. AI/ML has the potential to help bridge this gap. Originators of supply chain finance now have access to a greater wealth of data about the behavior and financial health of supply chain participants. Machine learning algorithms can be applied to these alternative data points (records of production, sales, on-time payments, performance, shipments, cancelled orders, and chargebacks) to create tailored financing solutions, assess credit risk, help predict fraud and detect supply chain threats cost-effectively and in real time.

Enhanced customer experience

Advances in natural language processing (NLP) mean that AI can be leveraged to provide a conversational interface with users, promising to disrupt the way customer services are delivered. Conversational AI is enabling consumers to manage all types of financial transactions, from bill payments and money transfers to opening new accounts. By offering these self-service interactions, financial firms can free customer service agents to focus on higher-value interactions and transactions. At the heart of conversational AI are deep learning models that require significant computing power to train chatbots to communicate in the domain-specific language of financial services.

Wrapping it up

In recent years, AI/ML technology has enabled the development of various innovative applications in the global financial services industry. The availability of big data, GPU hardware, parallel programming models, and elastic and scalable compute has been the key driver of the latest AI innovation wave.
If you are a financial institution that is embracing AI/ML to improve data-backed decisions, risk management and customer experiences, Ubuntu can be the common denominator in your AI journey from on-prem to cloud to edge.

Pic by Markus Winkler on Unsplash

View the full article

-

Editor’s note: Here we take a look at how Branch, a fintech startup, built its data platform with BigQuery and other Google Cloud solutions, democratizing data for its analysts and scientists.

As a startup in the fintech sector, Branch helps redefine the future of work by building innovative, simple-to-use tech solutions. We’re an employer payments platform, helping businesses provide faster pay and fee-free digital banking to their employees. As head of the Behavioral and Data Science team, I was tapped last year to build out Branch’s team and data platform. I brought my enthusiasm for Google Cloud and its easy-to-use solutions to the first day on the job.

We chose Google Cloud for ease-of-use, data & savings

I had worked with Google Cloud previously, and one of the primary mandates from our CTO was “Google Cloud-first,” with the larger goal of simplifying unnecessary complexity in the system architecture and controlling the costs associated with being on multiple cloud platforms. From the start, Google Cloud’s suite of solutions supported my vision of how to design a data team. There’s no one-size-fits-all approach. It starts with asking questions: what does Branch need? Which stage are we at? Will we be distributed or centralized? But above all, what parameters in the product will need to be optimized with analytics and data science approaches?

With team design, product parameterization is critical. In a product-driven company, the data science team can be most effective by tuning a product’s parameters. For example, a recommendation engine for an ecommerce site is driven by algorithms and underlying models that update parameters. In “Show X to this type of person but Y to that type of person,” X and Y are the parameters optimized by modeling behavioral patterns. Data scientists behind the scenes can run models of how that engine should work and determine which changes are needed.
By focusing on tuning parameters, the team is designed around determining and optimizing an objective function. That of course relies heavily on the data behind it. How do we label the outcome variable? Is a whole labeling service required? Is it clean data with a pipeline that won’t require a lot of engineering work? What data augmentation will be needed?

With that data science team design envisioned, I started by focusing on user behavior: deciding how to monitor and track it, how to partner with the product team to ensure it’s in line with the product objectives, then spinning up A/B testing and monitoring. On the optimization side, transaction monitoring is critical in fintech. We need to look for low-probability events and abnormal patterns in the data, and then take action, either reaching out to the user as quickly as possible to inform them, or stopping the transaction directly. In the design phase, we need to determine whether these actions need to be taken in real time or after the fact. Is it useful to the user to have that information in real time? For example, if we are working to encourage engagement, and we miss an event or an interaction, it’s not the end of the world. It’s different with a fraud monitoring system, for which you’ve got to be much stricter about real-time notifications.

Our data infrastructure

There are many use cases at Branch for data cloud technologies from Google Cloud. One is “basic” data work. It’s been incredibly easy to use BigQuery, Google’s serverless data warehouse, which is where we’ve replicated all of our SQL databases, and Cloud Scheduler, the fully managed enterprise-grade cron job scheduler. These two tools, working together, make it easy to organize data pipelining. And because of their deep integration, they play well with other Google Cloud solutions like Cloud Composer and Dataform, as well as with services, like Airflow, from other providers.
Especially for us as a startup, the whole Google Cloud suite of products accelerates the process of getting established and up and running, so we can perform the “bread-and-butter” work of data science. We also use BigQuery as a holder of heavier stats, and we train our models there weekly, monthly, or nightly, depending on how much data we collect. Then we leverage the messaging and ingestion tool Pub/Sub and its event systems to get the response in real time. We evaluate the output for that model in a Dataproc cluster or Dataform, and run all of that in Python notebooks, which can call out to BigQuery to train a model, or get evaluated and pass the event system through.

Full integration of data solutions

At the next level, you need to push data out to your internal teams. We are growing and evolving, so I looked for ways to save on costs during this transition. We do a heavy amount of work in Google Sheets because it integrates well with other Google services, getting data and visuals out to the people who need them and enabling them to access raw data and refresh as needed. Google Groups also makes it easy to restrict access to data tables, which is a vital concern in the fintech space. The infrastructure management and integration of Google Groups make it super useful. If an employee departs the organization, we can easily delete or control their level of access. We can add new employees to a group that has a certain level of rights, or read and write access to the underlying databases. As we grow with Google Cloud, I also envision being able to track user levels, including who’s running which SQL queries and who’s straining the database and raising our costs.

A streamlined data science team saves costs

I’d estimate that Google Cloud’s solutions have saved us the equivalent of one full-time engineer we’d otherwise need to hire to link the various tools together, making sure that they are functional and adding more monitoring.
Because of the fully managed features of many of Google Cloud’s products, that work is done for us, and we can focus on expanding our customer products. We’re now 100% Google Cloud for all production systems, having consolidated from IBM, AWS, and other cloud point solutions. For example, Branch is now expanding financial wellness offerings for our customers to encourage better financial behavior through transaction monitoring, forecasting their spend and deposits, and notifying them of risks or anomalies. With those products and others, we’ll be using and benefiting from the speed, scalability, and ease of use of Google Cloud solutions, which always keep data and data teams top of mind. Learn more about Branch. Curious about other use cases for BigQuery? Read how retailers can use BigQuery ML to create demand forecasting models.

-
