
Showing results for tags 'data centers'.

-

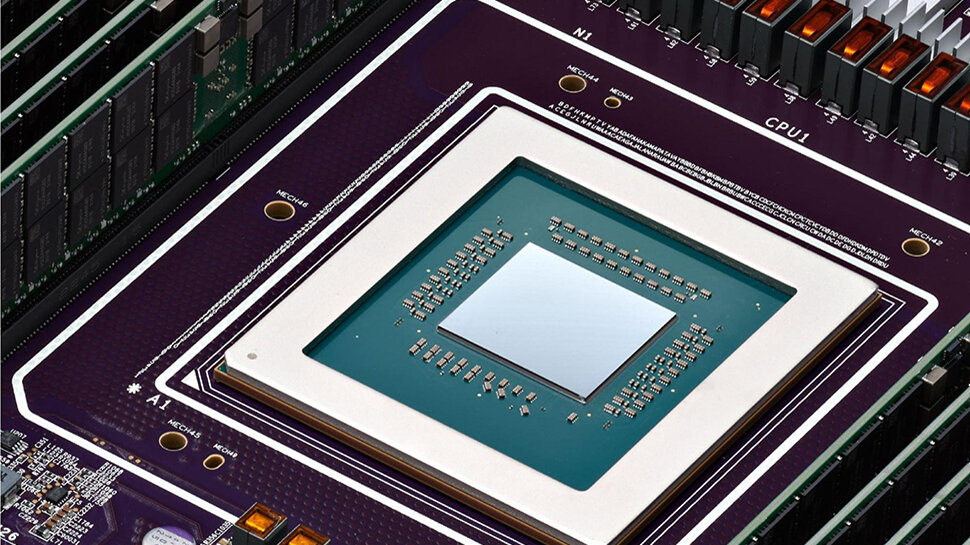

Microsoft and OpenAI are reportedly planning a groundbreaking data center project that would include an AI supercomputer named “Stargate”. According to a report by Anissa Gardizy and Amir Efrati in The Information, the project would be financed by Microsoft to the tune of more than $100 billion, reportedly has a launch date set for 2028, and aims to reduce the two companies' reliance on Nvidia, a goal shared by many of the tech giants investing in AI. Microsoft and OpenAI’s plan reportedly involves five phases, with Stargate being the fifth and most ambitious one.

The data center will be the supercomputer

The cost of the project is attributed to the age-old “sources familiar with the plans” (The Information says these are “a person who spoke to OpenAI CEO Sam Altman about it and a person who has viewed some of Microsoft’s initial cost estimates”), but neither Microsoft nor OpenAI has yet commented on the specifics of the project. The new data center project is expected to push the boundaries of AI capability, and its cost could exceed $115 billion. That is more than triple the amount Microsoft spent on capital expenditures for servers and equipment last year. Microsoft is currently working on a smaller, fourth-phase supercomputer for OpenAI that is expected to launch around 2026, The Information claims. Shedding more light on the report, The Next Platform says, “The first thing to note about the rumored ‘Stargate’ system that Microsoft is planning to build to support the computational needs of its large language model partner, OpenAI, is that the people doing the talking – reportedly OpenAI chief executive officer Sam Altman – are talking about a data center, not a supercomputer. And that is because the data center – and perhaps multiple data centers within a region with perhaps as many as 1 million XPU computational devices – will be the supercomputer.”

The Next Platform also says that if Stargate does come to fruition, it will be “based on future generations of Cobalt Arm server processors and Maia XPUs, with Ethernet scaling to hundreds of thousands to 1 million XPUs in a single machine,” and it definitely won’t be based on Nvidia GPUs and interconnects, which seems like a safe bet if the rumors are to be believed.

-

Google revealed its first custom Arm-based CPUs for data centers at its Google Cloud Next ’24 event. The new Google Axion processors are intended for general-purpose workloads such as web and app servers, containerized microservices, and open-source databases. The company’s investment in custom silicon dates back to 2015, when the tech behemoth launched its first Tensor Processing Units (TPUs). Google has also developed its own Video Coding Unit (VCU) and Tensor chips for mobile devices.

A significant milestone

Google's main cloud rivals, Amazon and Microsoft, already have their own Arm-based CPUs, but Amin Vahdat, Google's vice president of machine learning, systems and cloud AI, boasted, “Axion processors combine Google’s silicon expertise with Arm’s highest performing CPU cores to deliver instances with up to 30% better performance than the fastest general-purpose Arm-based instances available in the cloud today.” Axion CPUs will also have “up to 50% better performance and up to 60% better energy-efficiency than comparable current-generation x86-based instances,” Vahdat added. Built on Arm Neoverse V2 CPU cores and the standard Armv9 architecture and instruction set, the new processors are underpinned by Titanium, a system of custom silicon microcontrollers and tiered scale-out offloads designed to optimize performance for customer workloads. “Google’s announcement of the new Axion CPU marks a significant milestone in delivering custom silicon that is optimized for Google’s infrastructure, and built on our high-performance Arm Neoverse V2 platform,” Arm CEO Rene Haas said. “Decades of ecosystem investment, combined with Google’s ongoing innovation and open-source software contributions ensure the best experience for the workloads that matter most to customers running on Arm everywhere.”

The contributions to the Arm ecosystem that Haas mentioned include open-sourcing Android, Kubernetes, TensorFlow, and the Go language, which should pave the way for Axion's application compatibility and interoperability. Google says customers will be able to deploy Arm workloads on Google Cloud with limited code rewrites, tapping into an ecosystem of cloud customers and software developers already using Arm-native software. The new Axion processors will be available to Google Cloud customers later this year, and virtual machines based on the CPUs will be available in preview in the coming months.

-

Amazon Web Services (AWS) has secured a nuclear-powered data center campus as part of a $650 million agreement with Texas-based electricity generation and transmission company Talen Energy. AWS’s acquisition includes the Cumulus data center complex, situated next to Talen’s 2.5-gigawatt Susquehanna nuclear power plant in northeastern Pennsylvania. Cumulus, which claimed a 48-megawatt capacity when it opened last year, was already on track to expand nearly tenfold to 475 megawatts under Talen’s ownership. The Amazon takeover could mean even more growth as the cloud giant looks to power its data centers with cleaner energy sources.

AWS nuclear-powered data center

Amazon’s payments are set to be staggered, with a $350 million lump sum due upon deal closure and a further $300 million payable upon the completion of certain development milestones set to take place this year. As part of the agreement, Talen will continue to supply Amazon with direct access to power produced by its Susquehanna nuclear power plant, which could reach 960 megawatts in the coming years. While nuclear energy may be a controversial power source, AWS’s acquisition aligns with its commitment to carbon-free and renewable energy sources. Data centers are already being scrutinized for their intensive energy and natural resource consumption, and AWS operates many of them around the world. Amazon has also been busy snapping up other green energy opportunities, such as an Oregon-based wind farm for which it signed a power purchase agreement last month. The move could also help it stay ahead of key rivals: Microsoft and Google have likewise been transitioning to clean energy in recent months in a bid to reduce the environmental burden of data centers, with nuclear, wind, solar and geothermal plants all under consideration.

-

Data centres are going through a transformation. Gradually, we will see a new type of equipment attached to servers in almost every data centre: smartNICs are here. They will be the enablers of converged data centres, in which common infrastructure tasks are offloaded from a host server to attached network interface cards (NICs). Think of it this way: host servers will run only the applications that drive the actual return on investment. Operating the infrastructure itself will be the task of a fleet of smartNICs, which take over the necessary networking, storage, and security tasks, among many others, on the servers' behalf. In other words, data centres will do what they are actually supposed to do: run applications. The overhead will be offloaded, driving efficiency...

-

Gartner Inc. forecasts that global data center infrastructure spending will increase by 6% in 2021. Restricted cash flow caused by the COVID-19 pandemic significantly decreased data center spending in 2020. Despite that decline, Gartner predicts that the data center market will grow year-on-year through 2024.
